Disclaimer:

The information provided on this blog is for educational purposes only. The use of hacking tools discussed here is at your own risk.

For the full disclaimer, please click here.

Sometimes, hacking isn’t as cinematic as Hollywood would have you believe. No dramatic music, no frantic typing, no glowing screens full of scrolling code. And often, it’s not even as technical as scanning networks, finding zero-days, or dropping shells.

In fact, some of the most effective hacks rely on one thing: being present and paying attention where others don’t.

Picture this: You’re working at a large organization. Every employee carries a badge — not just for access control, but also to buy coffee from sleek, connected machines scattered around the campus. No cash, no cards — just tap your badge and get your caffeine fix. Convenient.

To a hacker’s mind, this setup is a playground. A hundred ways to exploit it immediately come to mind: protocol fuzzing, hardware tampering, network sniffing, maybe even firmware injection. But what if you didn’t need any of that?

What if the simplest way in was… just watching?

The Target

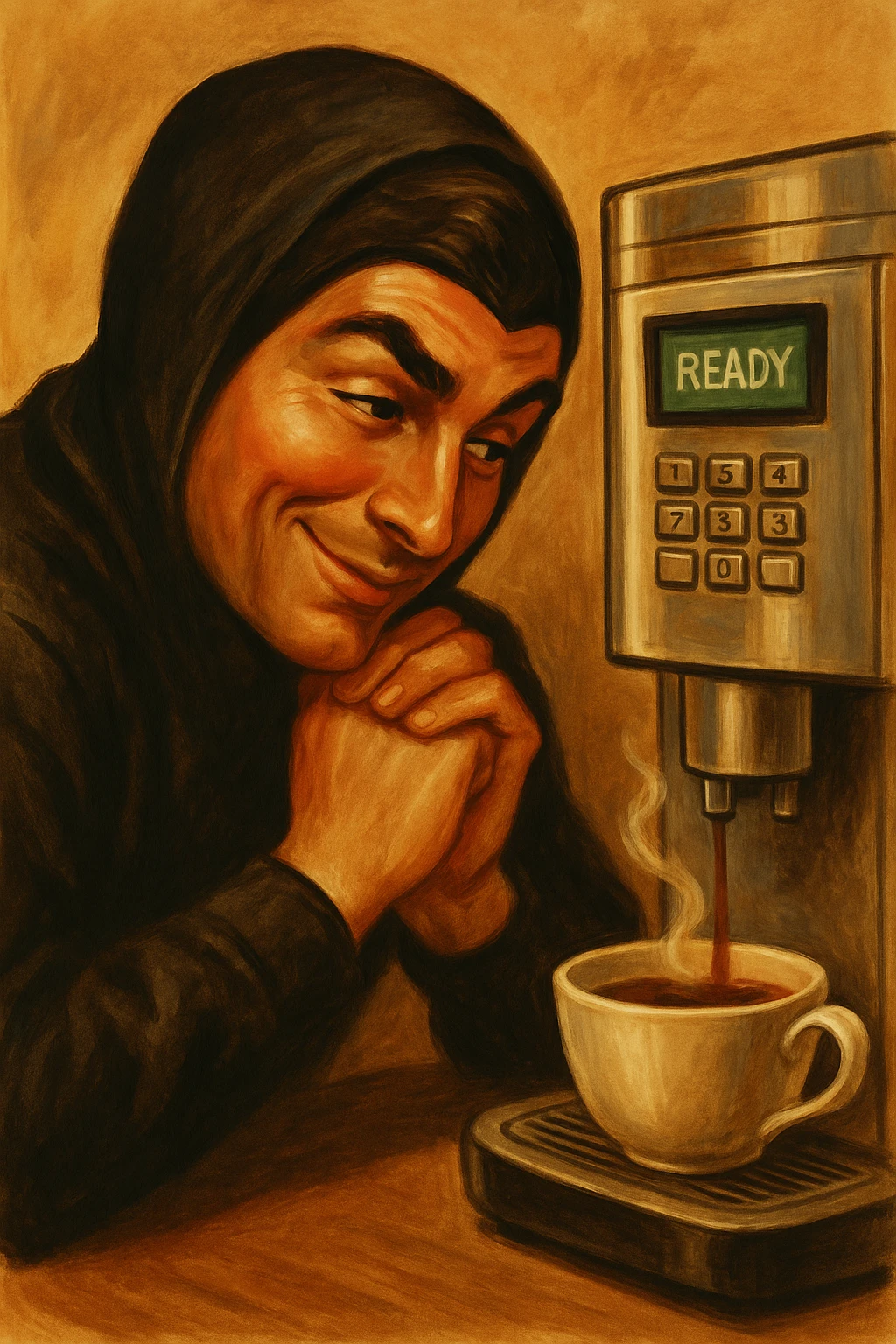

The target was a WMF 1100S. You can find these in a lot of hotels and company offices. Look familiar?

Disclaimer: All PINs shown in this post have long since been reset. In fact, the company has switched to entirely different coffee machines since then. Everything described here took place around five years ago.

Recon

First, I examined the coffee machines themselves. What ports did they have? How did they accept input? Was there a user or service manual available?

And when were the machines usually serviced?

From my office window, I actually had a pretty good view of the small kitchen area where one of the machines was located. I took notes.

While googling for standard PINs for these models, I came across a forum post that gave me a solid lead on a possible default PIN to try:

https://www.kaffee-netz.de/threads/wmf-1500-s.138683

That forum post was like striking gold.

The problem was I had no idea how to even access the admin menu to try the PIN 😅

And from my office window, I couldn’t quite see how the maintenance staff interacted with the machine either.

Social Engineering

One morning, I spotted the maintenance guy down the hall and decided to casually hang around the kitchen—pretending to “rinse my cup” and “grab some water”.

I was a few seconds too late to see how he accessed the menu, so I figured I’d just ask directly.

I made some small talk, then jokingly asked if he had some kind of secret hacker move to get into the machine. I played it light—curious and a bit impressed.

To my surprise, he told me: “You just tap the top corner of the touchscreen 10 times to open a hidden menu.”

After he left, I tried tapping all four corners—eventually, a login screen popped up asking for a PIN.

Since I already had a potential PIN from that forum post, I tried it right away.

No luck. ☹️

Brute Force

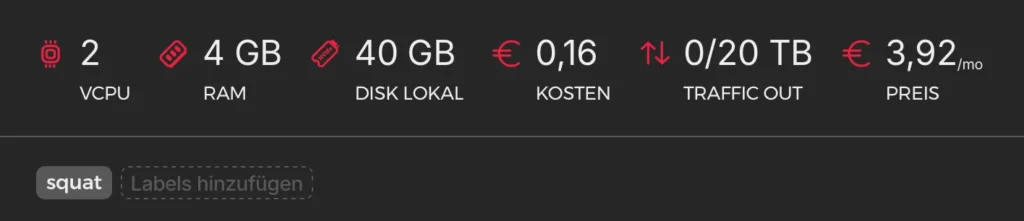

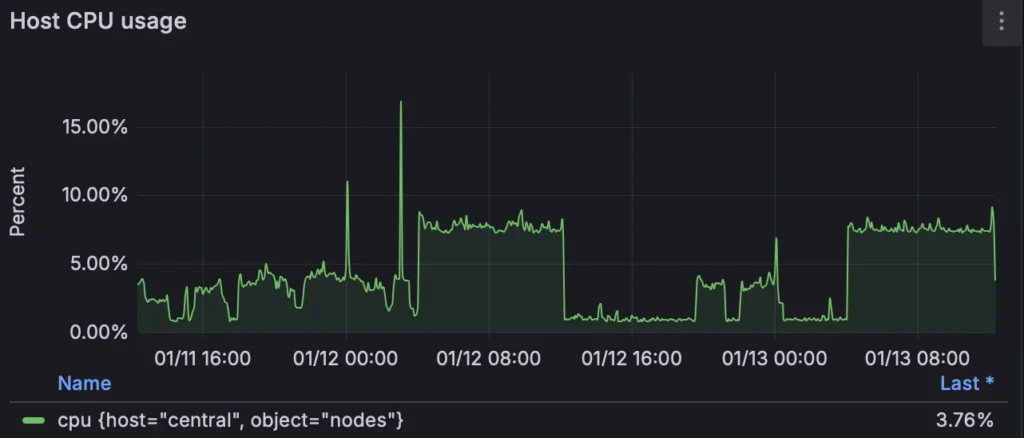

Later on, I went for a brute-force approach, but the machine only allowed 3 attempts every 15 minutes.

The PIN could be anywhere between 4 and 9 digits long, which works out to roughly 1.1 billion possible combinations of the digits 0–9.
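For a sense of scale, here is a quick back-of-the-envelope sketch of what that lockout means for a naive brute force. It only uses the numbers from above (4 to 9 digits, 3 attempts per 15 minutes); the function names are made up for this illustration and nothing here talks to a real machine.

```javascript
// Sketch: size of the PIN keyspace and worst-case guessing time under the lockout.
// Assumptions taken from the post: PINs are 4 to 9 digits long (digits 0-9),
// and the machine allows 3 attempts per 15-minute window.
// pinKeyspace and worstCaseDays are hypothetical helper names.

function pinKeyspace(minLen, maxLen) {
  let total = 0;
  for (let len = minLen; len <= maxLen; len++) {
    total += 10 ** len; // 10 choices for each digit position
  }
  return total;
}

function worstCaseDays(keyspace, attemptsPerWindow, windowMinutes) {
  const windowsPerDay = (24 * 60) / windowMinutes;
  const attemptsPerDay = attemptsPerWindow * windowsPerDay;
  return keyspace / attemptsPerDay;
}

const keyspace = pinKeyspace(4, 9);
const days = worstCaseDays(keyspace, 3, 15);

console.log(`Possible PINs: ${keyspace.toLocaleString('en-US')}`);
console.log(`Worst case: about ${Math.round(days / 365)} years of nonstop guessing`);
```

Trying every PIN in order would take thousands of years on a single machine, so guessing the PINs a human is most likely to pick is the only realistic option.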

I started with the classic: 1111.

And… I was in. Just like that.

At first, I thought it must’ve been a glitch or that I had accidentally locked the machine. But then I navigated to the Billing/Reporting section, a menu only accessible to the highest-level admin, where I saw actual usage and sales stats.

That’s when I knew: I was definitely in.

My Notes

Free Coffee Is Close

Alright, now that I was in, the big question:

How do I actually get free coffee?

Digging through the documentation, I found a feature called “Testausgabe” (test dispense). It lets you run a test brew, which is normally just 50% of the size of a regular drink.

So here’s the trick:

First, I went into the recipe settings and set the coffee amount to 200%.

Then, when the test brew ran, it dispensed a full-sized drink.

After that? I deleted the logs, logged out, wiped the screen for fingerprints—done.

Free coffee. No trace. (Except, of course, that the log was now empty. But individual entries carry timestamps that could have been traced back to me, so clearing the log entirely was the safer option.)
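The arithmetic behind the trick is simple enough to sketch. This assumes the test dispense scales linearly with the recipe amount, which matches what happened here but is an assumption rather than documented behavior. The names below are made up for illustration.

```javascript
// Sketch of the volume arithmetic behind the test-dispense trick.
// Assumption: the test brew is a fixed fraction (about 50%) of whatever
// the recipe amount is set to. TEST_DISPENSE_FACTOR and
// testDispensePercent are hypothetical names, not part of any WMF software.

const TEST_DISPENSE_FACTOR = 0.5;

function testDispensePercent(recipePercent, factor = TEST_DISPENSE_FACTOR) {
  // Result is expressed as a percentage of a regular full-sized drink.
  return recipePercent * factor;
}

console.log(testDispensePercent(100)); // stock recipe: half a drink
console.log(testDispensePercent(200)); // doubled recipe: a full drink
```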

What now?

I found myself in a bit of an ethical conundrum.

I now had access to free coffee across the entire company campus—probably over 100 machines.

The PIN was always the same: 1111.

No one would trace it back to me. And, truthfully, no one seemed to care enough to ever check the logs.

So… what would you do?

Unlimited free coffee. Only you know. Virtually untraceable.

But there was a catch:

This wasn’t an approved test. I had no written permission. And I had just started working at the company.

In Germany, during the first six months—the infamous Probezeit—you can be fired without any reason at all.

So if I reported the issue, which was obviously the right thing to do, there was still a real chance they’d see it as a breach of trust… and just let me go.

Coming Clean

When I was hired, I had been very upfront with my employer. I told them I was a Red Teamer before (😉) I ever got paid to be one.

They knew I had the mindset—the ability to think and act like a malicious actor, driven by what some might call “criminal energy.”

But that’s exactly why they brought me in: to channel those instincts into something useful, legal, and ideally even reportable (at least sometimes).

So I figured—this is exactly what they wanted me for. Time to do what I do best.

I reached out to someone fairly high up, someone I knew to be an ally of the cybersecurity team, and invited them to lunch.

They said yes.

After some casual conversation, I carefully steered the topic and asked:

“Wouldn’t it be kind of funny if someone figured out how to hack all the coffee machines?”

(I worded it slightly more diplomatically… but not by much, I prefer to be direct.)

They thought it would be awesome if someone pulled that off.

That’s when I pulled out my iPad, opened a PDF I had prepared, and walked them through the entire process of how I did it, step by step.

They laughed and I knew I wasn’t getting fired. 🥳

A few days later, the department in charge of the coffee machines rotated all the PINs.

Level 2: Persistence Pays

At this point, most people probably would’ve called it a day. Grab a (paid) coffee, pat themselves on the back, and move on.

But I saw an opportunity to take things to the next level. 😁

I had forgotten the exact algorithm for generating the PINs mentioned in that forum post earlier, so I decided to go full brute-force again.

As before, you only get three attempts every 15 minutes—but I had access to four machines in my building. So whenever I went for coffee, I made a mini round and tried my luck on each one.

I experimented with common PINs, office room numbers, floor numbers, patterns—basically anything a rotating maintenance crew might use that’s easy to remember.

It was no use. The new PINs were better now.

After about two weeks of sporadic attempts, I gave up guessing.

Some time later, curiosity got the better of me again. I went back online, dug through my old searches, and finally found that forum post again.

It turns out the default PIN mentioned there was still relevant: it isn't a fixed number but is generated from the current date and hour.

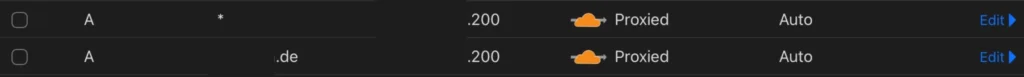

To make things easier for myself (and maybe to smile every time I got a coffee), I even built a tiny website that displayed it: cup.exploit.to

Here is the code and result:

<div id="reversed-date-container">
  <strong id="reversed-date"></strong>
</div>

<script>
(function() {
  // Reverse the characters of a string, e.g. "07" becomes "70".
  function reverseString(str) {
    return str.split('').reverse().join('');
  }

  // Build "<reversed month> <reversed day> <reversed hour>" from the
  // current local time and show it on the page and in the tab title.
  function updateReversedDate() {
    const now = new Date();
    const day = String(now.getDate()).padStart(2, '0');
    const month = String(now.getMonth() + 1).padStart(2, '0'); // getMonth() is zero-based
    const hours = String(now.getHours()).padStart(2, '0');

    const reversedDay = reverseString(day);
    const reversedMonth = reverseString(month);
    const reversedHours = reverseString(hours);
    const reversedDate = `${reversedMonth} ${reversedDay} ${reversedHours}`;

    const displayElement = document.getElementById('reversed-date');
    if (displayElement) {
      displayElement.textContent = reversedDate;
    }
    document.title = reversedDate;
  }

  updateReversedDate();
  // Refresh every second so the value rolls over with the clock.
  setInterval(updateReversedDate, 1000);
})();
</script>

Level 3: Hotel Hacks & Buffet Logic

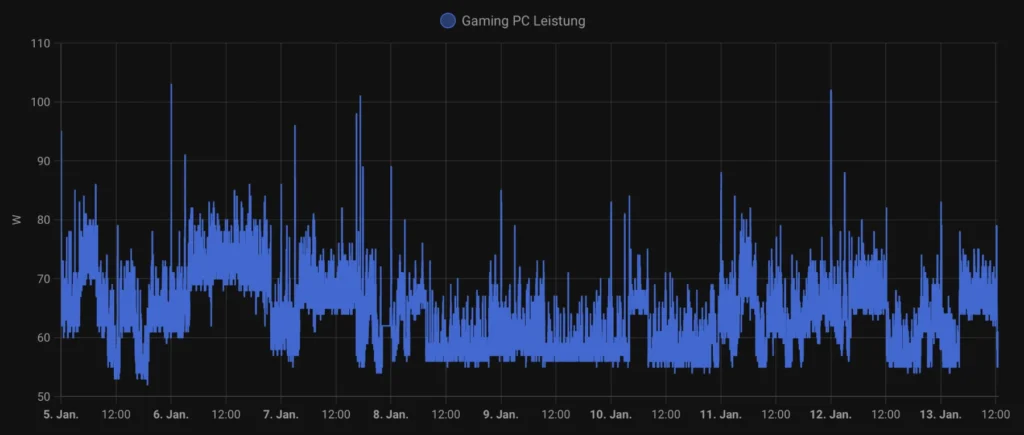

I was staying at a convention hotel where they had WMF coffee machines—just like the ones we used at work.

The machines were locked at night and early in the morning, only being unlocked during official meeting hours for free use.

Now, hotels tend to trigger two instincts in me:

- Buffet Maximization Mode – I feel an irrational urge to get my money’s worth (even though I’m not paying, the company is). So I go full-on strategy: all protein, no carbs, stretchy pants, and relentless focus.

- Hack Everything Mode – If it has a screen, a button, or a port… I’m curious.

These machines responded the same way—tap the top right corner of the screen 10 times, and you get the admin login prompt.

Unfortunately, none of the usual suspects worked—no standard codes, no default PINs like 1111, nothing.

So I did what any totally normal person would do:

I parked myself in a chair in front of the machines, opened my laptop, and pretended to be deep in code (as usual). As soon as the hotel staff came to unlock the machine, I casually watched the touch sequence.

What I saw was:

- top left

- middle

- middle

- top right

Once I tried it myself, that translated to 1-5-5-3.

Jackpot.

I could now unlock all the machines at the hotel—whenever I wanted.
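Turning an observed touch sequence into digits is just a lookup. The sketch below assumes a phone-style 3x3 keypad with 1-2-3 on the top row, which is consistent with the 1-5-5-3 result above; the position names and the helper function are made up for this illustration.

```javascript
// Sketch: translate observed touch positions into keypad digits.
// Assumption: a phone-style 3x3 layout (1-2-3 on the top row).
// KEYPAD and positionsToPin are hypothetical names for this example.

const KEYPAD = new Map([
  ['top left', 1],    ['top middle', 2],    ['top right', 3],
  ['middle left', 4], ['middle', 5],        ['middle right', 6],
  ['bottom left', 7], ['bottom middle', 8], ['bottom right', 9],
]);

function positionsToPin(positions) {
  return positions
    .map((pos) => {
      if (!KEYPAD.has(pos)) {
        throw new Error(`Unknown position: ${pos}`);
      }
      return KEYPAD.get(pos);
    })
    .join('-');
}

console.log(positionsToPin(['top left', 'middle', 'middle', 'top right']));
// prints "1-5-5-3"
```

It also shows why shoulder surfing works so well on touchscreens: four rough screen positions are enough to recover the whole PIN.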

Coffee was free anyway, so… no harm done. Just a little unauthorized efficiency.

More Hotel Hackery

A few years earlier, during another (different) hotel stay, we discovered that the lobby music system was powered by none other than… an Amazon Alexa.

Naturally, we got curious—and a bit mischievous.

It turned out the Alexa device was linked to a fully funded Amazon Prime account. And how did we find that out?

By politely asking it to order 10 kilograms of Weißwurst.

Alexa, of course, doesn’t place the order immediately anymore.

Instead, it replied cheerfully that the item had been added to the shopping cart.

That was all the confirmation we needed.

From there, we added anything remotely Bavarian we could think of: pretzels, beer steins, lederhosen—you name it.

The cart was starting to look like Oktoberfest.


Conclusion

Go hack stuff, steal coffee—they probably won’t fire you for it.

(Totally a joke. Seriously, don’t do this. Ever.)

But picture this:

Someone breaks into your apartment, makes themselves a cup of coffee, stares you dead in the eyes, and walks out—without saying a word.

Maybe they even slam the door behind them.

Sometimes they tell you to refill the beans before they’re back… or complain the coffee was weak.

Yeah.

Not that funny anymore, is it?

Well, that’s basically what’s happening here.

Okay, I’m being a bit dramatic. But for the record:

Everything I did was disclosed responsibly. I had permission.

No one got hurt. No machines were broken. And both the hotel and the company actually fixed the issues.

Even the service and maintenance staff got something out of it:

They realized they could be manipulated—and that they’re part of the attack surface, too.

The system got stronger and I got my coffee.

Okay, my little sweetiepies, that’s it for this one.

Hope to see you back soon—tell your parents I said hi.

And as always: loooove youuuuu 💘

Stay caffeinated. Stay curious.