Ever ask your mom for a shiny new Google, only to hear:

We have Google at home, son!

and then she proudly shows you her self-hosted SearXNG instance?

Yeah… me neither.

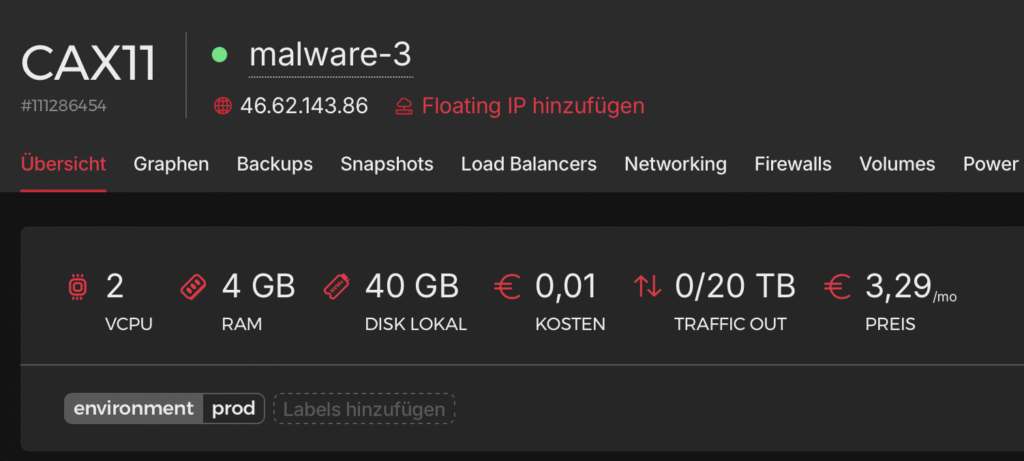

But today, let me play that role for you and introduce you to my very own SearXNG setup.

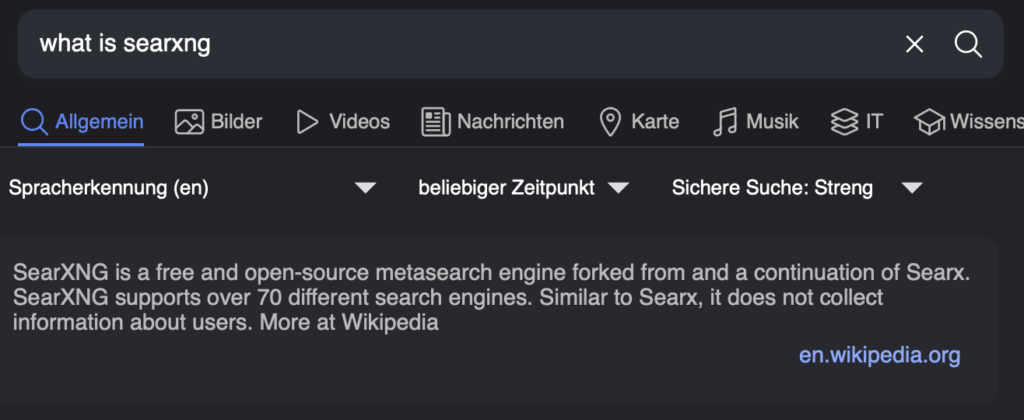

What is SearXNG?

In other words (ChatGPT’s):

SearXNG is a privacy-friendly meta-search engine. Instead of being one search engine like Google, it asks lots of engines at once (Google, Bing, Wikipedia, etc.) and shows you all the results together, without ads, tracking, or profiling.

Think of it like calling ten friends for advice instead of one, but none of them know who you are. 🤫 (kind of like you and me, fren ❤️)

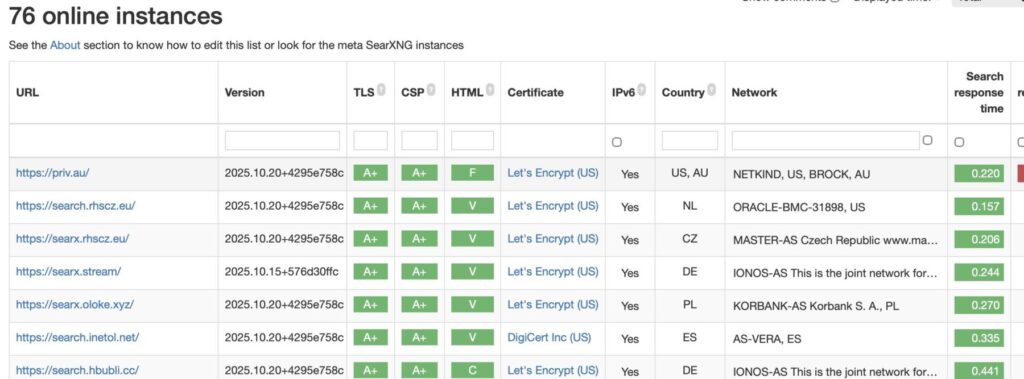

Despite the intro, you don’t have to self-host SearXNG. Plenty of people run public instances you can use; there’s a directory at https://searx.space

Self-Hosting SearXNG

Of course we’re hosting it ourselves. Trusting someone else with your searches? Ha! Not today.

I run a Proxmox server at home (something I’ve rambled about in other posts). For my current SearXNG instance, I pretty much just used this script:

👉 Proxmox Community SearXNG Script

The Proxmox Community Scripts page is a gem: it makes spinning up your own VMs or containers as simple as a single bash command. The catch is that you are running random scripts from the internet on your system… ewww. Reviewing them is usually so annoying that, if you’re truly paranoid, you might as well build it yourself.

Sure, you could go the Docker route, but then you’ve got to audit the Dockerfile too. Pick your poison. Personally, I stick with Proxmox Community Scripts, but I also keep a close eye on things with Wazuh, honeypots, and Grafana+Loki. If there’s a network call I didn’t make or plan, I hear about it immediately.

Docker Option

If you prefer Docker, SearXNG has an official repo with a handy docker-compose file.

At the time of writing, the compose file looks like this:

```yaml
services:
  caddy:
    container_name: caddy
    image: docker.io/library/caddy:2-alpine
    network_mode: host
    restart: unless-stopped
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      - caddy-data:/data:rw
      - caddy-config:/config:rw
    environment:
      - SEARXNG_HOSTNAME=${SEARXNG_HOSTNAME:-http://localhost}
      - SEARXNG_TLS=${LETSENCRYPT_EMAIL:-internal}
    logging:
      driver: "json-file"
      options:
        max-size: "1m"
        max-file: "1"

  redis:
    container_name: redis
    image: docker.io/valkey/valkey:8-alpine
    command: valkey-server --save 30 1 --loglevel warning
    restart: unless-stopped
    networks:
      - searxng
    volumes:
      - valkey-data2:/data
    logging:
      driver: "json-file"
      options:
        max-size: "1m"
        max-file: "1"

  searxng:
    container_name: searxng
    image: docker.io/searxng/searxng:latest
    restart: unless-stopped
    networks:
      - searxng
    ports:
      - "127.0.0.1:8080:8080"
    volumes:
      - ./searxng:/etc/searxng:rw
      - searxng-data:/var/cache/searxng:rw
    environment:
      - SEARXNG_BASE_URL=https://${SEARXNG_HOSTNAME:-localhost}/
    logging:
      driver: "json-file"
      options:
        max-size: "1m"
        max-file: "1"

networks:
  searxng:

volumes:
  caddy-data:
  caddy-config:
  valkey-data2:
  searxng-data:
```

Honestly, I wish I had some epic war stories about running SearXNG… but it’s almost disappointingly easy 😂. I just left the standard settings as they are, no tweaks, no drama.

SearXNG API

Now here’s the fun part: the API.

In my opinion, the sexiest feature of SearXNG is its built-in search API. Normally, you’d have to pay through the nose for this kind of functionality to power your OSINT workflows, AI tools, or random scripts. With SearXNG, you get it for free. (Okay, technically the search engines themselves apply rate limits, but still, that’s a sweet deal.)

Enabling it is dead simple. Just flip the switch in your config:

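```bash
nano /etc/searxng/settings.yml
```

(With the Docker setup, that’s `./searxng/settings.yml` on the host, since `./searxng` is mounted to `/etc/searxng`.) Add `json` to the search formats. If your file already has a `search:` section, extend its `formats` list rather than adding a second `search:` key:

```yaml
search:
  safe_search: 2
  autocomplete: 'google'
  formats:
    - html
    - json  # <- THIS!
```

Restart SearXNG so it picks up the change, and boom 💥 you’ve got yourself a free, self-hosted search API you can use like so: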

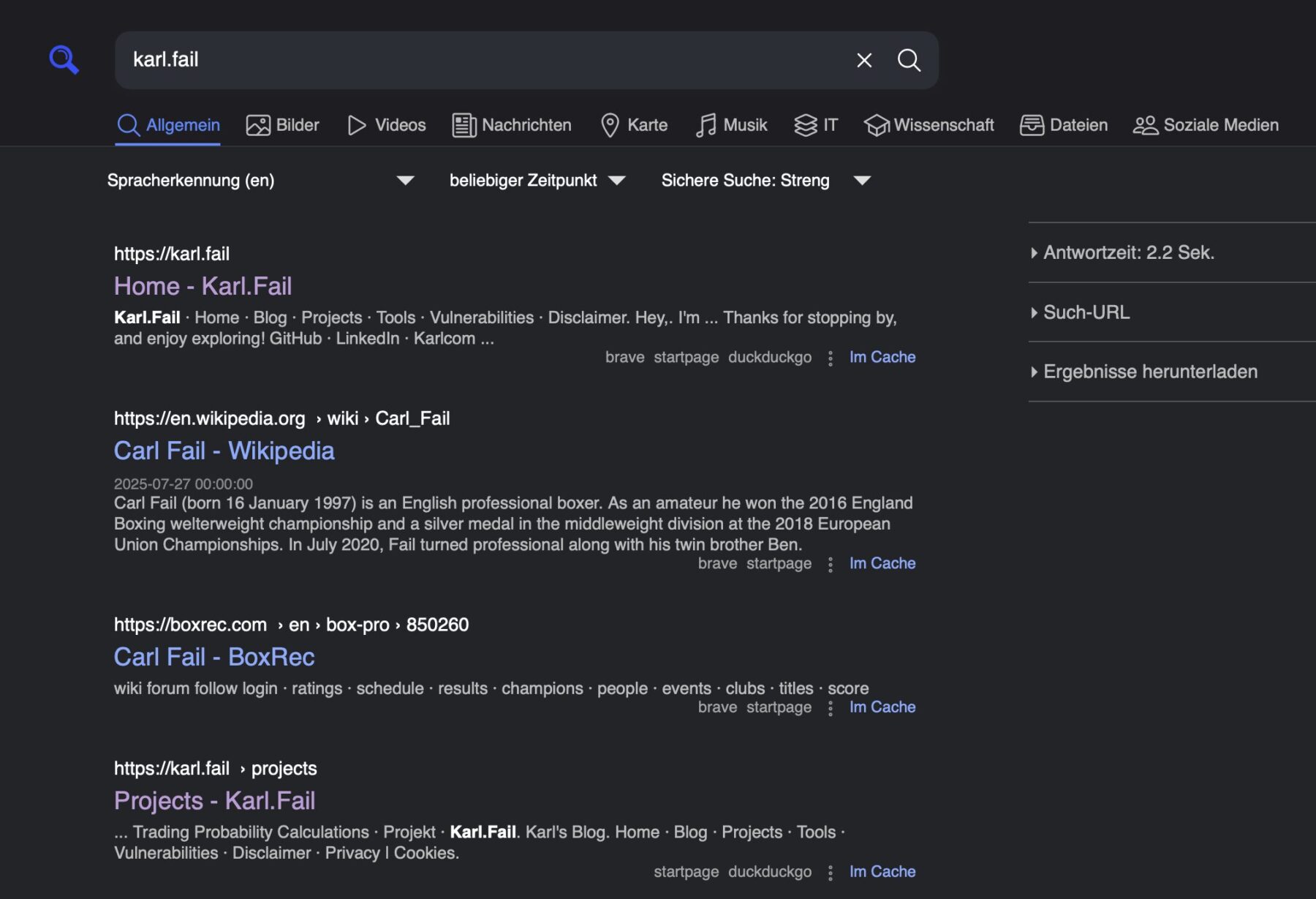

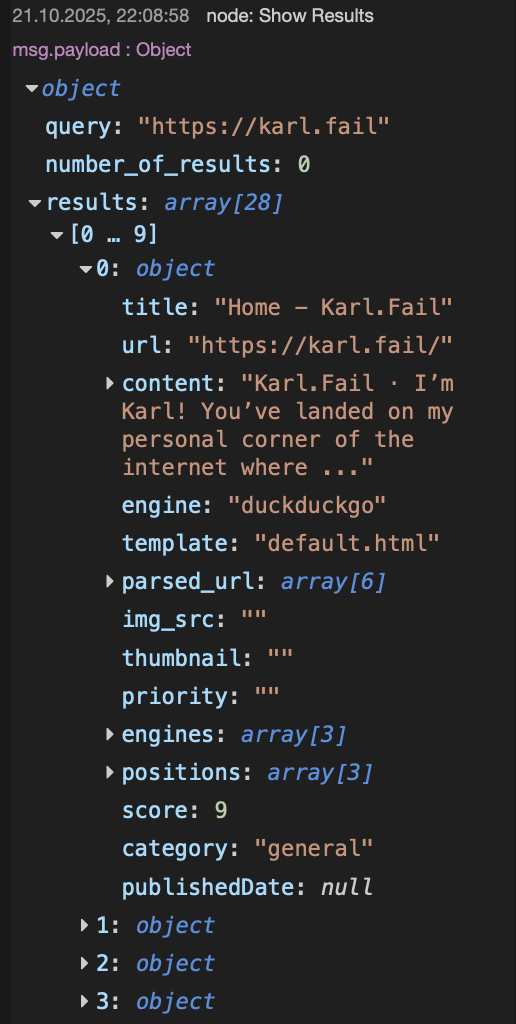

```
https://your-search.instance/search?q=karl.fail&format=json
```

```json
{"query": "karl.fail", "number_of_results": 0, "results": [{"url": "https://karl.fail/", "title": "Home - Karl.Fail", "content": "Karl.Fail \u00b7 Home \u00b7 Blog \u00b7 Projects \u00b7 Tools \u00b7 Vulnerabilities \u00b7 Disclaimer. Hey,. I'm ... Thanks for stopping by, and enjoy exploring! GitHub \u00b7 LinkedIn \u00b7 Karlcom\u00a0...", "publishedDate": null, "thumbnail": "", "engine": "brave", "template": "default.html", "parsed_url": ["https", "karl.fail", "/", "", "", ""], "img_src": "", "priority": "", "engines": ["brave", "startpage", "duckduckgo"], "positions": [1, 1, 1], "score": 9.0, "category": "general"}, {"url": "https://en.wikipedia.org/wiki/Carl_Fail", "title": "Carl Fail - Wikipedia", "content": "Carl Fail (born 16 January 1997) is an English professional boxer. As an amateur he won the 2016 England Boxing welterweight championship and a silver medal in the middleweight division at the 2018 European Union Championships. In July 2020, Fail turned professional along with his twin brother Ben.", "publishedDate": "2025-07-27T00:00:00", "thumbnail": "", "engine": "brave", "template": "default.html", "parsed_url": ["https", "en.wikipedia.org", "/wiki/Carl_Fail", "", "", ""], "img_src": "", "priority": "", "engines": ["brave", "startpage"], ...
```

When you query the API, you’ll get a nice clean JSON response back. (I trimmed this one down so you don’t have to scroll forever.)
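If you’d rather hit the API from a script instead of a browser, here’s a minimal sketch in JavaScript (Node 18+ for the built-in `fetch`). The endpoint URL is a placeholder, and `buildSearchUrl`, `summarizeResults` and `search` are names I made up for this example. It only relies on the `results`, `url`, `title`, `engine` and `engines` fields visible in the response above, and it assumes `json` is enabled in `formats`; SearXNG rejects requests for a format that isn’t enabled with HTTP 403.

```javascript
// Minimal SearXNG JSON API client (sketch). Needs Node 18+ for global fetch.
// Assumes "json" is listed under search.formats in settings.yml; otherwise
// SearXNG rejects format=json requests with HTTP 403.

// Build the request URL. URLSearchParams takes care of percent-encoding.
function buildSearchUrl(endpoint, query, pageno = 1) {
  const url = new URL(endpoint); // e.g. "https://your-search.instance/search"
  url.searchParams.set("q", query);
  url.searchParams.set("format", "json");
  url.searchParams.set("pageno", String(pageno));
  return url.toString();
}

// Reduce each result to title, URL and the engines that returned it.
function summarizeResults(payload) {
  const results = Array.isArray(payload?.results) ? payload.results : [];
  return results.map((r) => ({
    title: r.title,
    url: r.url,
    // "engines" lists every engine that found the hit; fall back to "engine".
    engines: Array.isArray(r.engines) ? r.engines : [r.engine].filter(Boolean),
  }));
}

// Fetch one page of results and return the summarized list.
async function search(endpoint, query, pageno = 1) {
  const res = await fetch(buildSearchUrl(endpoint, query, pageno), {
    headers: { Accept: "application/json" },
  });
  if (res.status === 403) {
    throw new Error("HTTP 403: is 'json' enabled under search.formats?");
  }
  if (!res.ok) {
    throw new Error(`SearXNG returned HTTP ${res.status}`);
  }
  return summarizeResults(await res.json());
}
```

Usage would look something like `search("https://your-search.instance/search", "karl.fail").then(console.log, console.error);`. Splitting URL building and result parsing out of `search` keeps the network call thin, so the parts that can break quietly are easy to check on their own.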

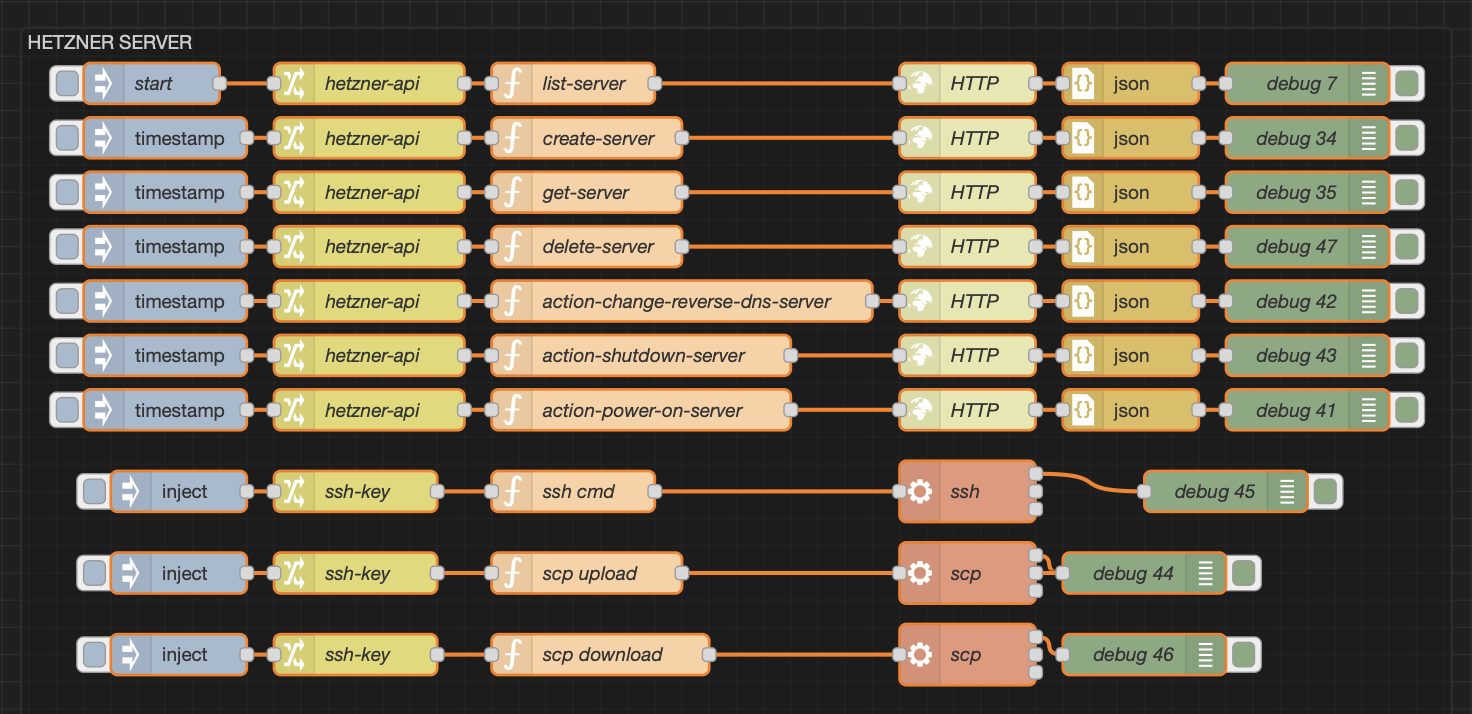

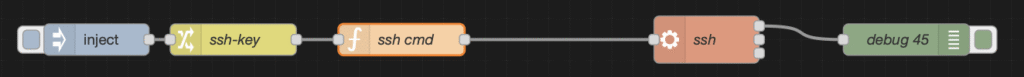

Node-RED + SearXNG

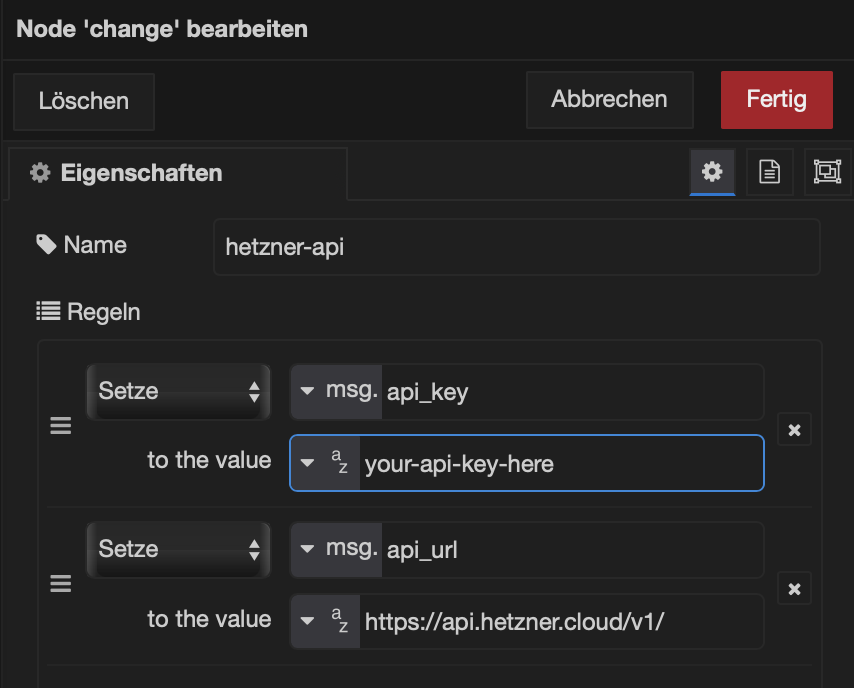

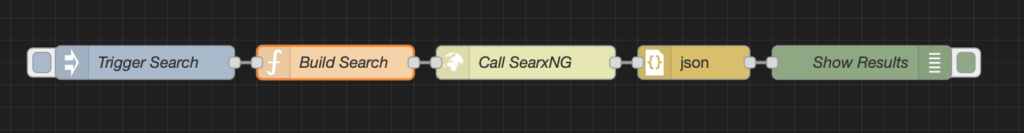

And this is where things get fun(ner). Instead of just running curl commands, you can wire up SearXNG directly into Node-RED. That means you can chain searches into automations, OSINT pipelines, or even goofy side projects, without touching a line of code (except copying and pasting mine, you sly dog).

There are countless ways to use SearXNG, either as your daily driver for private search, or as a clean JSON API powering your tools, OSINT automations, and little gremlins you call “scripts.”

Let me show you a quick Node-RED function node:

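```javascript
// Node-RED Function node: turns a search term into a SearXNG API request.
// The HTTP Request node must have its method set to "- set by msg.method -"
// and its URL field left empty, so it uses msg.method and msg.url from here.
const base = "https://your.domain/search";

// The search term arrives as msg.payload; ask for JSON, first page of results.
const qs = "q=" + encodeURIComponent(msg.payload)
    + "&format=json"
    + "&pageno=1";

msg.method = "GET";
msg.url = base + "?" + qs;
msg.headers = { "Accept": "application/json" };

return msg;
```

`msg.payload` is your search term. Everything else just wires the pieces together: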

Flow:

Inject → Function → HTTP Request → JSON → Debug

When you run the flow, you’ll see the results come back as clean JSON. In my case, it even found my own website, and as a bonus it tells you which engine returned the hit (shout-out to “DuckDuckGo”).
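Since every hit carries an `engines` list (you can see it in the JSON response earlier), you could go one step further and drop a second Function node between the JSON and Debug nodes to count how many hits each engine contributed. This is a minimal sketch, not part of my flow: the name `countHitsPerEngine` is made up, and it assumes `msg.payload` is the parsed SearXNG response coming out of the JSON node.

```javascript
// Hypothetical second Function node: JSON node -> this -> Debug node.
// Counts how many results each engine contributed to the response.
function countHitsPerEngine(msg) {
  // After the JSON node, msg.payload is the parsed SearXNG response.
  const results = Array.isArray(msg.payload && msg.payload.results)
    ? msg.payload.results
    : [];
  const counts = {};
  for (const r of results) {
    // Prefer the "engines" array; fall back to the single "engine" field.
    const engines = Array.isArray(r.engines) ? r.engines : [r.engine];
    for (const e of engines) {
      if (e) counts[e] = (counts[e] || 0) + 1;
    }
  }
  msg.payload = { query: msg.payload && msg.payload.query, counts };
  return msg;
}
```

In the Function node you’d paste the function and end the body with `return countHitsPerEngine(msg);`, since Node-RED passes on whatever the node body returns. For the trimmed `karl.fail` response, the first two hits alone would add 2 to `brave` and `startpage` and 1 to `duckduckgo`.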

Pretty cool. Pretty simple. And honestly, that’s the whole magic of SearXNG: powerful results without any unnecessary complexity.

Summary

This was a quick tour of a seriously awesome tool. These days there are plenty of privacy-friendly search engines. You can trust them… or not 🤷‍♂️. The beauty of SearXNG is that you don’t have to: you can just host your own.

For the OSINT crowd (especially the developer types), this can be a real game-changer. Automate your dorks, feed the results into your local LLM, and suddenly you’ve got clean, filtered intelligence with almost no effort.
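To make “automate your dorks” a bit more concrete, here’s a rough sketch (Node 18+, same JavaScript as the Node-RED example) that runs a list of queries one after another against your instance, then merges the hits into one list deduplicated by URL, remembering which queries surfaced each hit. The endpoint, the two-second delay and the function names `mergeResults` and `runDorks` are my own placeholders, not anything SearXNG prescribes. What you then feed to your local LLM is up to you.

```javascript
// Sketch: run several dorks against a SearXNG instance and merge the hits.
// ENDPOINT is a placeholder; needs Node 18+ for global fetch.
const ENDPOINT = "https://your-search.instance/search";

// Merge result lists from several queries, deduplicated by URL.
function mergeResults(batches) {
  const byUrl = new Map();
  for (const { query, results } of batches) {
    for (const r of results || []) {
      if (!r || !r.url) continue; // skip malformed entries
      if (!byUrl.has(r.url)) {
        byUrl.set(r.url, { url: r.url, title: r.title, queries: [] });
      }
      const entry = byUrl.get(r.url);
      if (!entry.queries.includes(query)) entry.queries.push(query);
    }
  }
  // Map keeps insertion order, so hits stay in the order they first appeared.
  return [...byUrl.values()];
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Query each dork in turn, pausing between requests, then merge everything.
async function runDorks(dorks, delayMs = 2000) {
  const batches = [];
  for (const query of dorks) {
    const url = new URL(ENDPOINT);
    url.searchParams.set("q", query);
    url.searchParams.set("format", "json");
    const res = await fetch(url, { headers: { Accept: "application/json" } });
    if (!res.ok) throw new Error(`HTTP ${res.status} for query "${query}"`);
    const payload = await res.json();
    batches.push({ query, results: payload.results });
    // Be polite: the upstream engines rate-limit aggressive clients.
    await sleep(delayMs);
  }
  return mergeResults(batches);
}
```

Something like `runDorks(["site:karl.fail", "\"karl.fail\" -site:karl.fail"]).then(console.log)` would print one entry per unique URL. The queries run sequentially on purpose: firing them all in parallel is the quickest way to run into the upstream engines’ rate limits.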

Whatever your use case, I highly recommend giving SearXNG a try. Show the project some love: star it, support it, spread the word, tell your mom about it, and tell her I said hi 👋.