The name Squidward comes from TAD → Threat Modelling, Attack Surface and Data. “Tadl” is the German nickname for Squidward from SpongeBob, so I figured—since it’s kind of a data kraken—why not use that name?

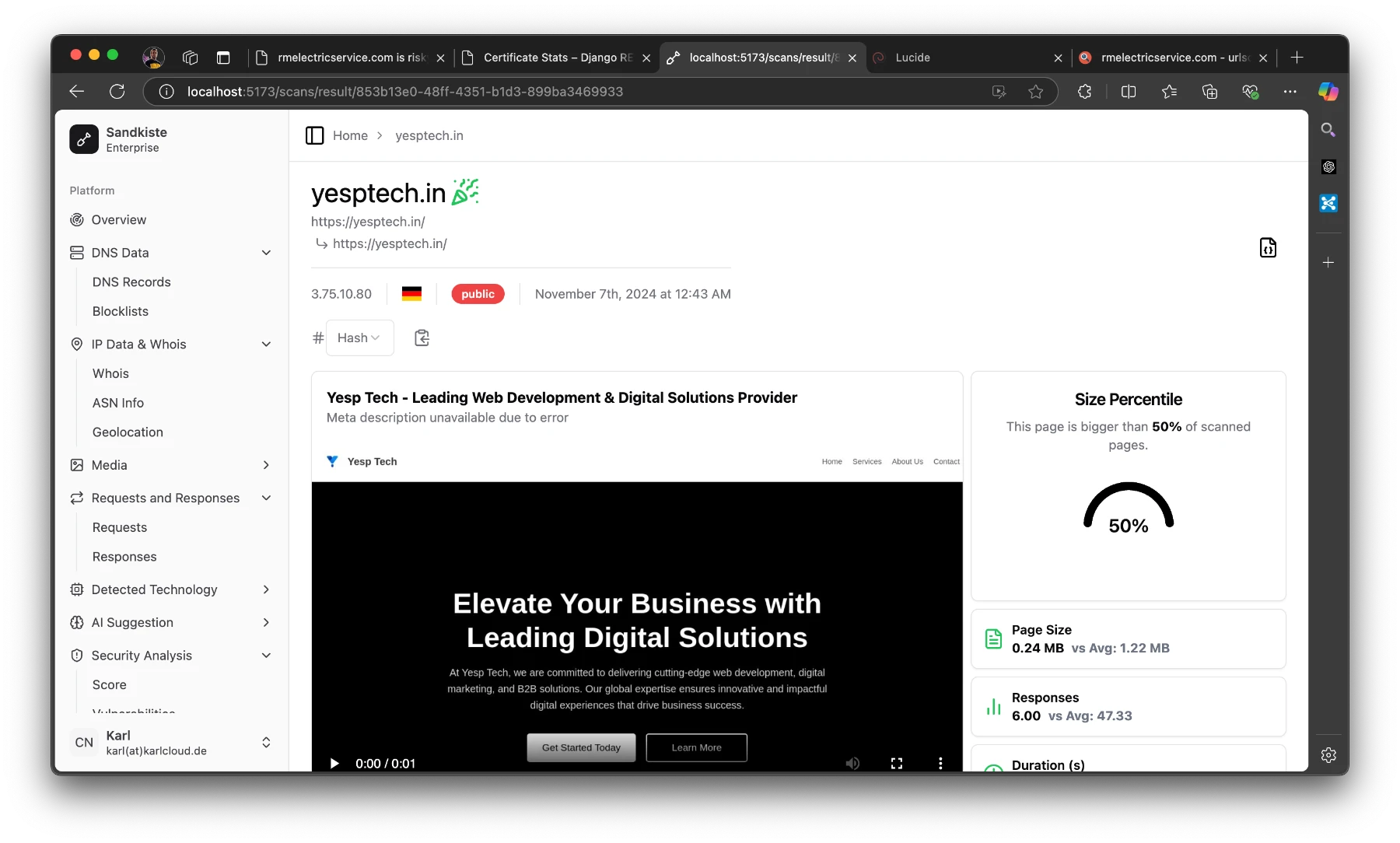

It’s a continuous observation and monitoring script that notifies you about changes in your internet-facing infrastructure. Think Shodan Monitor, but self-hosted.

Technology Stack

- certspotter: Keeps an eye on targets for new certificates and sneaky subdomains.

- Discord: The command center—control the bot, add targets, and get real-time alerts.

- dnsx: Grabs DNS records.

- subfinder: The initial scout, hunting down subdomains.

- rustscan: Blazing-fast port scanner for newly found endpoints.

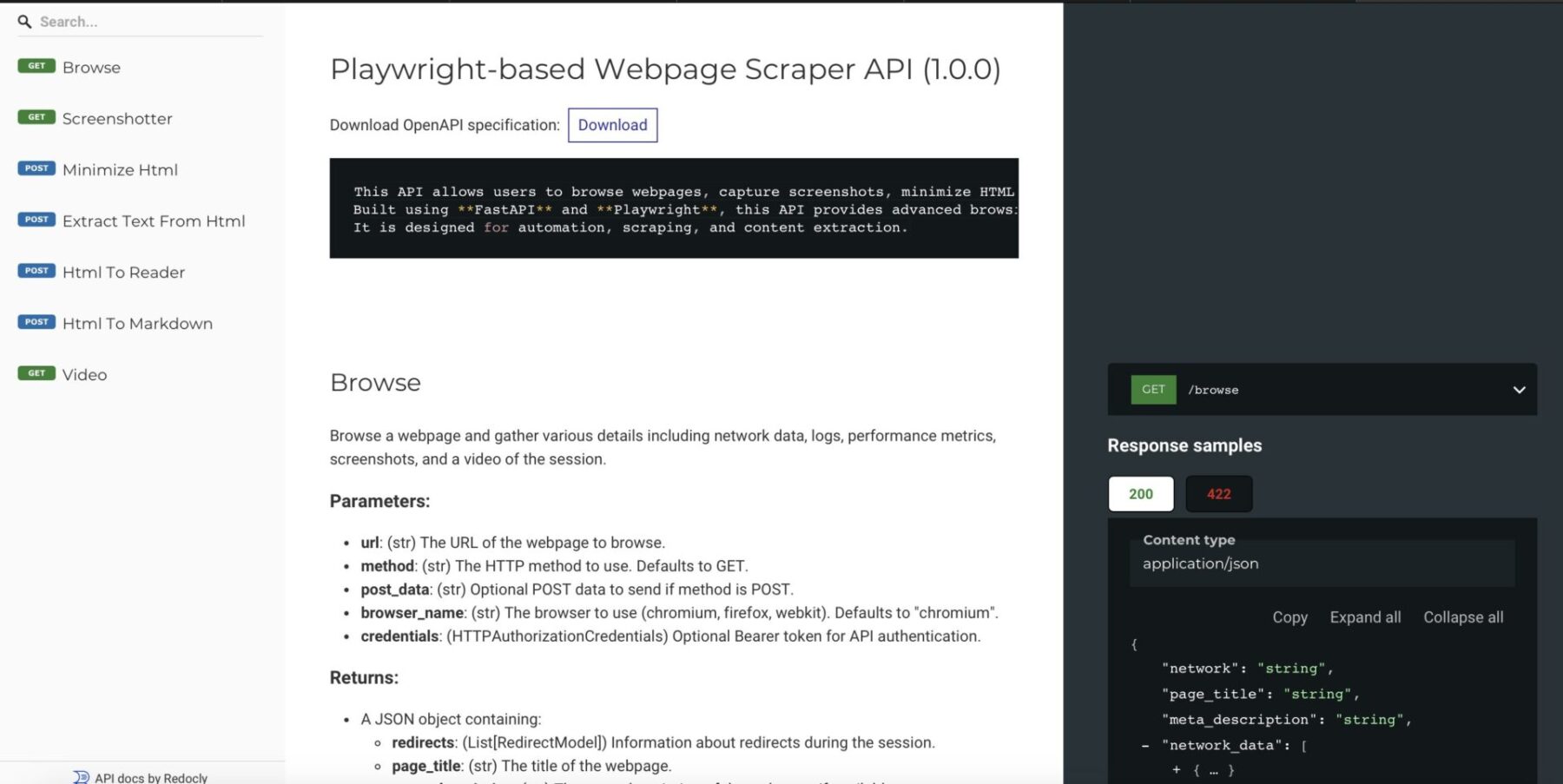

- httpx: Checks ports for web UI and detects underlying technologies.

- nuclei: Runs a quick vulnerability scan to spot weak spots.

- anew: Really handy deduplication tool.

At this point, I gotta give a massive shoutout to ProjectDiscovery for open-sourcing some of the best recon tools out there—completely free! Seriously, a huge chunk of my projects rely on these tools. Go check them out, contribute, and support them. They deserve it!

(Not getting paid to say this—just genuinely impressed.)

How it works

I had to rewrite certspotter a little bit to accommodate a different input and output scheme. The rest is fairly simple.

Setting Up Directories

The script ensures required directories exist before running:

- $HOME/squidward/data for storing results.

- Subdirectories for logs: onlynew, allfound, alldedupe, backlog.
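A minimal sketch of that directory setup, assuming the layout listed above. `mkdir -p` creates missing parent directories and does nothing for ones that already exist, so no existence checks are needed. The function name `setup_dirs` and its argument are hypothetical, chosen for illustration.

```shell
#!/bin/bash
# setup_dirs DATA_PATH: create the data directory and its four log subdirectories.
# Hypothetical helper for illustration; the original script hardcodes $HOME/squidward/data.
setup_dirs() {
  local data_path="$1"
  # -p creates parents as needed and does not fail on existing directories
  mkdir -p "$data_path/onlynew" "$data_path/allfound" \
           "$data_path/alldedupe" "$data_path/backlog"
}
```

Calling `setup_dirs "$HOME/squidward/data"` would reproduce the layout from the list, and calling it twice is harmless.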

Running Subdomain Enumeration

- squidward (my modified certspotter) watches Certificate Transparency logs for newly issued certificates to discover new subdomains.

- subfinder further identifies subdomains from multiple sources.

- Results are stored in logs and sent as notifications (to a Discord webhook).
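Every stage keeps three logs: everything ever seen (allfound), the unique set (alldedupe), and what is new in this run (onlynew). The "only new" part is what anew provides. The sketch below is a plain-bash stand-in that mimics this behaviour for illustration; `append_new` is a hypothetical name and not part of anew or the original script.

```shell
#!/bin/bash
# append_new FILE: read lines from stdin, append the ones FILE does not contain yet,
# and print only those newly added lines. Hypothetical stand-in for anew.
append_new() {
  local file="$1" line
  touch "$file"
  while IFS= read -r line; do
    # -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -Fxq -- "$line" "$file"; then
      printf '%s\n' "$line" >> "$file"
      printf '%s\n' "$line"
    fi
  done
}
```

Piping a second batch of subdomains through `append_new` with the same file would print only the entries that were not in the first batch, which is what ends up in the Discord notification.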

DNS Resolution

dnsx takes the discovered subdomains and resolves:

- A/AAAA (IPv4/IPv6 records)

- CNAME (Canonical names)

- NS (Name servers)

- TXT, PTR, MX, SOA records
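The port scan later needs plain IPv4 addresses, so the script pulls them out of the dnsx output with a regex. A small sketch of that step, using the same pattern as the script. The sample line format in the usage note (`host [A] [ip]`) is an assumption about what dnsx prints with -resp and may differ between versions; `extract_ipv4` is a hypothetical name.

```shell
#!/bin/bash
# Same IPv4 pattern the script uses: four octets in the range 0-255.
ipv4_re='\b((25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b'

# extract_ipv4: print each distinct IPv4 address found on stdin, one per line.
extract_ipv4() {
  grep -oE "$ipv4_re" | sort -u
}
```

For example, `printf 'a.example.com [A] [192.0.2.10]\n' | extract_ipv4` would yield the bare address, while CNAME or TXT lines without an IPv4 address produce nothing.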

HTTP Probing

httpx analyzes the discovered subdomains by sending HTTP requests, extracting:

- Status codes, content lengths, content types.

- Hash values (SHA256).

- Headers like server, title, location, etc.

- Probing for WebSocket, CDN, and methods.
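Only endpoints that actually answered get passed on to the vulnerability scan. The script does this by keeping lines marked `[SUCCESS]` and cutting out the first field. A minimal sketch of that filter follows; the sample line layout in the usage note is an assumption about httpx output with -probe, and `live_urls` is a hypothetical name.

```shell
#!/bin/bash
# live_urls: keep only probe results marked [SUCCESS] and print their first field (the URL).
live_urls() {
  # -F treats the brackets literally instead of as a regex character class
  grep -F '[SUCCESS]' | cut -d ' ' -f1
}
```

Fed a line such as `https://a.example.com [200] [SUCCESS]`, this would pass on just the URL; lines marked `[FAILED]` are dropped.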

Vulnerability Scanning

- nuclei scans for known vulnerabilities on discovered targets.

- The scan focuses on high, critical, and unknown severity issues.
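One detail worth spelling out: the severity list has to reach nuclei as a single comma-separated argument. The shell splits on spaces, so writing the list with spaces after the commas turns it into several separate arguments. The sketch below only demonstrates the shell's word splitting; `count_args` is a hypothetical helper and nuclei itself is not called.

```shell
#!/bin/bash
# count_args: print how many arguments the shell passed in.
count_args() {
  echo "$#"
}

count_args -s high, critical, unknown   # prints 4: the list was split into three words
count_args -s high,critical,unknown     # prints 2: the flag plus one list argument
```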

Port Scanning

- rustscan finds open ports for each discovered subdomain.

- If open ports exist, additional HTTP probing and vulnerability scanning are performed.
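To hand the open ports to httpx one by one, the port list has to be pulled out of the rustscan result. A sketch using only bash parameter expansion, assuming the greppable (-g) output looks like `192.0.2.10 -> [80,443]`; that format and the name `parse_ports` are assumptions for illustration.

```shell
#!/bin/bash
# parse_ports LINE: print the ports from a line like "192.0.2.10 -> [80,443]",
# separated by spaces. Returns 1 if the line has no bracketed list.
parse_ports() {
  local line="$1" list
  [[ "$line" == *"["*"]"* ]] || return 1
  list="${line#*\[}"    # drop everything up to and including the first "["
  list="${list%%\]*}"   # drop everything from the first "]" onwards
  echo "${list//,/ }"   # turn commas into spaces
}
```

A loop such as `for port in $(parse_ports "$match"); do ...; done` can then probe `host:port` for each entry.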

Automation and Notifications

- Discord notifications are sent after each stage.

- The script prevents multiple simultaneous runs by counting matching processes (ps -ef | grep "squiddy.sh") and skipping the run if it finds more than two.

- Randomization (shuf) is used to shuffle the scan order.
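Counting processes with ps and grep works, but it depends on the script name and on how many helper processes show up. A common alternative is a lock directory, since `mkdir` either creates it or fails, with nothing in between. The following is a sketch of that alternative, not what the original script does; the function names and the lock path in the usage note are hypothetical.

```shell
#!/bin/bash
# acquire_lock DIR: succeed only if DIR could be created, i.e. nobody else holds the lock.
acquire_lock() {
  # plain mkdir (no -p) fails when the directory already exists
  mkdir "$1" 2>/dev/null
}

# release_lock DIR: remove the lock directory again.
release_lock() {
  rmdir "$1"
}
```

A script could call `acquire_lock /tmp/squiddy.lock` at the top, register `release_lock` in an EXIT trap, and post the "already running" message when the call fails. One caveat: if the script is killed hard, the lock directory stays behind and has to be removed by hand.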

Main Execution

If another squiddy.sh instance is running, the script posts a notice to Discord and skips this run instead of starting a second scan.

If no duplicate instance exists:

- Squidward (certspotter) runs first.

- The main scanning pipeline (what_i_want_what_i_really_really_want()) then runs subfinder, dnsx, rustscan, httpx, and nuclei in that order.

The Code

I wrote this about six years ago and just laid eyes on it again for the first time. I have absolutely no clue what past me was thinking 😂, but hey—here you go:

#!/bin/bash

#############################################

#

# Single script usage:

# echo "test.karl.fail" | ./httpx -sc -cl -ct -location -hash sha256 -rt -lc -wc -title -server -td -method -websocket -ip -cname -cdn -probe -x GET -silent

# echo "test.karl.fail" | ./dnsx -a -aaaa -cname -ns -txt -ptr -mx -soa -resp -silent

# echo "test.karl.fail" | ./subfinder -silent

# echo "test.karl.fail" | ./nuclei -ni

#

#

#

#

#############################################

# -----> globals <-----

workdir="squidward"

script_path=$HOME/$workdir

data_path=$HOME/$workdir/data

only_new=$data_path/onlynew

all_found=$data_path/allfound

all_dedupe=$data_path/alldedupe

backlog=$data_path/backlog

# -----------------------

# -----> dir-setup <-----

setup() {

# mkdir -p creates missing parents and is a no-op for directories that already exist,

# so the order of creation no longer matters (the old checks tried to create

# the subdirectories before $data_path existed)

mkdir -p "$script_path" "$data_path" "$only_new" "$all_found" "$all_dedupe" "$backlog"

}

# -----------------------

# -----> subfinder <-----

write_subfinder_log() {

tee -a $all_found/subfinder.txt | $script_path/anew $all_dedupe/subfinder.txt | tee $only_new/subfinder.txt

}

run_subfinder() {

$script_path/subfinder -dL $only_new/certspotter.txt -silent | write_subfinder_log;

$script_path/notify -data $only_new/subfinder.txt -bulk -provider discord -id crawl -silent

sleep 5

}

# -----------------------

# -----> dnsx <-----

write_dnsx_log() {

tee -a $all_found/dnsx.txt | $script_path/anew $all_dedupe/dnsx.txt | tee $only_new/dnsx.txt

}

run_dnsx() {

$script_path/dnsx -l $only_new/subfinder.txt -a -aaaa -cname -ns -txt -ptr -mx -soa -resp -silent | write_dnsx_log;

$script_path/notify -data $only_new/dnsx.txt -bulk -provider discord -id crawl -silent

sleep 5

}

# -----------------------

# -----> httpx <-----

write_httpx_log() {

tee -a $all_found/httpx.txt | $script_path/anew $all_dedupe/httpx.txt | tee $only_new/httpx.txt

}

run_httpx() {

$script_path/httpx -l $only_new/subfinder.txt -sc -cl -ct -location -hash sha256 -rt -lc -wc -title \

-server -td -method -websocket -ip -cname -cdn -probe -x GET -silent | write_httpx_log;

$script_path/notify -data $only_new/httpx.txt -bulk -provider discord -id crawl -silent

sleep 5

}

# -----------------------

# -----> nuclei <-----

write_nuclei_log() {

tee -a $all_found/nuclei.txt | $script_path/anew $all_dedupe/nuclei.txt | tee $only_new/nuclei.txt

}

run_nuclei() {

$script_path/nuclei -ni -l $only_new/httpx.txt -s high,critical,unknown -rl 5 -silent \

| write_nuclei_log | $script_path/notify -provider discord -id vuln -silent

}

# -----------------------

# -----> squidward <-----

write_squidward_log() {

tee -a $all_found/certspotter.txt | $script_path/anew $all_dedupe/certspotter.txt | tee -a $only_new/forscans.txt

}

run_squidward() {

rm -f $script_path/config/certspotter/lock

$script_path/squidward | write_squidward_log | $script_path/notify -provider discord -id cert -silent

sleep 3

}

# -----------------------

send_certspotted() {

$script_path/notify -data $only_new/certspotter.txt -bulk -provider discord -id crawl -silent

sleep 5

}

send_starting() {

echo "Hi! I am Squiddy!" | $script_path/notify -provider discord -id crawl -silent

echo "I am gonna start searching for new targets now :)" | $script_path/notify -provider discord -id crawl -silent

}

dns_to_ip() {

# TODO: give txt file of subdomains to get IPs from file

$script_path/dnsx -a -l $1 -resp -silent \

| grep -oE "\b((25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b" \

| sort --unique

}

run_rustcan() {

local input=""

if [[ -p /dev/stdin ]]; then

input="$(cat -)"

else

input="${@}"

fi

if [[ -z "${input}" ]]; then

return 1

fi

# ${input// /,} -> join space to comma (double slash replaces every space, a single slash only the first)

# -> loop because otherwise rustscan will take forever to scan all IPs and only save results at the end

# we could do this to scan all at once instead: $script_path/rustscan -b 100 -g --scan-order random -a ${input// /,}

for ip in ${input}

do

$script_path/rustscan -b 500 -g --scan-order random -a $ip

done

}

write_rustscan_log() {

tee -a $all_found/rustscan.txt | $script_path/anew $all_dedupe/rustscan.txt | tee $only_new/rustscan.txt

}

what_i_want_what_i_really_really_want() {

# shuffle certspotter file cause why not

cat $only_new/forscans.txt | shuf -o $only_new/forscans.txt

$script_path/subfinder -silent -dL $only_new/forscans.txt | write_subfinder_log

$script_path/notify -silent -data $only_new/subfinder.txt -bulk -provider discord -id subfinder

# -> empty forscans.txt

> $only_new/forscans.txt

# shuffle subfinder file cause why not

cat $only_new/subfinder.txt | shuf -o $only_new/subfinder.txt

$script_path/dnsx -l $only_new/subfinder.txt -silent -a -aaaa -cname -ns -txt -ptr -mx -soa -resp | write_dnsx_log

$script_path/notify -data $only_new/dnsx.txt -bulk -provider discord -id dnsx -silent

# shuffle dns file before iter to randomize scans a little bit

cat $only_new/dnsx.txt | shuf -o $only_new/dnsx.txt

sleep 1

cat $only_new/dnsx.txt | shuf -o $only_new/dnsx.txt

while IFS= read -r line

do

dns_name=$(echo $line | cut -d ' ' -f1)

ip=$(echo ${line} \

| grep -E "\[(\b((25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b)\]" \

| grep -oE "(\b((25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b)")

match=$(echo $ip | run_rustcan)

if [ ! -z "$match" ]

then

ports_unformat=$(echo ${match} | grep -Po '\[\K[^]]*')

ports=${ports_unformat//,/ }

echo "$dns_name - $ip - $ports" | write_rustscan_log

$script_path/notify -silent -data $only_new/rustscan.txt -bulk -provider discord -id portscan

for port in ${ports}

do

echo "$dns_name:$port" | $script_path/httpx -silent -sc -cl -ct -location \

-hash sha256 -rt -lc -wc -title -server -td -method -websocket \

-ip -cname -cdn -probe -x GET | write_httpx_log | grep "\[SUCCESS\]" | cut -d ' ' -f1 \

| $script_path/nuclei -silent -ni -s high,critical,unknown -rl 10 \

| write_nuclei_log | $script_path/notify -provider discord -id nuclei -silent

$script_path/notify -silent -data $only_new/httpx.txt -bulk -provider discord -id httpx

done

fi

done < "$only_new/dnsx.txt"

}

main() {

dupe_script=$(ps -ef | grep "squiddy.sh" | grep -v grep | wc -l | xargs)

if [ ${dupe_script} -gt 2 ]; then

echo "Hey friends! Squiddy is already running, I am gonna try again later." | $script_path/notify -provider discord -id crawl -silent

else

send_starting

echo "Running Squidward"

run_squidward

echo "Running the entire rest"

what_i_want_what_i_really_really_want

# -> leaving it in for now but replace with above function

#echo "Running Subfinder"

#run_subfinder

#echo "Running DNSX"

#run_dnsx

#echo "Running HTTPX"

#run_httpx

#echo "Running Nuclei"

#run_nuclei

fi

}

setup

dupe_script=$(ps -ef | grep "squiddy.sh" | grep -v grep | wc -l | xargs)

if [ ${dupe_script} -gt 2 ]; then

echo "Hey friends! Squiddy is already running, I am gonna try again later." | $script_path/notify -provider discord -id crawl -silent

else

#send_starting

echo "Running Squidward"

run_squidward

fi

There’s also a Python-based Discord bot that goes with this, but I’ll spare you that code. It did work back in the day 😬.

Conclusion

Back when I was a Red Teamer, this setup was a game-changer—not just during engagements, but even before them. Sometimes, during client sales calls, they’d expect you to be some kind of all-knowing security wizard who already understands their infrastructure better than they do.

So, I’d sit in these calls, quietly feeding their possible targets into Squidward and within seconds, I’d have real-time recon data. Then, I’d casually drop something like, “Well, how about I start with server XYZ? I can already see it’s vulnerable to CVE-Blah.” Most customers loved that level of preparedness.

I haven’t touched this setup in ages, and honestly, I have no clue how I’d even get it running again. I would probably go about it using Node-RED like in this post.

These days, I work for big corporate, using commercial tools for the same tasks. But writing about this definitely brought back some good memories.

Anyway, time for bed! It’s late, and you’ve got work tomorrow. Sweet dreams! 🥰😴

Have another scary squid man monster that didn’t make featured, buh-byeee 👋