The Switch: Why I Dumped ChatGPT Plus for Gemini

For a long time, I was a loyal subscriber to ChatGPT Plus. I happily paid the €23.99/month to access the best AI models. But recently, my focus shifted. I’m currently optimizing my finances to invest more in index ETFs and aim for early retirement (FIRE). Every Euro counts.

That’s when I stumbled upon a massive opportunity: Gemini Advanced.

I managed to snag a promotional deal for Gemini Advanced at just €8.99/month. That is roughly 62% cheaper than ChatGPT Plus for a comparable, and in some ways superior, feature set. Multimodal capabilities, huge context windows, and deep Google integration for the price of a sandwich? That is an immediate win for my portfolio.
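The savings math fits in a few lines of Python. This uses the two prices quoted above; actual prices vary by country and promotion:

```python
chatgpt_plus = 23.99  # €/month, price quoted above
gemini_promo = 8.99   # €/month, promotional price quoted above

monthly_saving = chatgpt_plus - gemini_promo
saving_pct = monthly_saving / chatgpt_plus * 100
yearly_saving = monthly_saving * 12

print(f"Monthly saving: €{monthly_saving:.2f}")  # €15.00
print(f"Cheaper by:     {saving_pct:.1f}%")      # 62.5%
print(f"Yearly saving:  €{yearly_saving:.2f}")   # €180.00
```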

(Obviously, not using AI is no longer an option in 2026. Sorry, not sorry.)

The Developer Nightmare: Scraping ChatGPT

As a developer, I love automating tasks. With ChatGPT, I built my own “API” to bypass the expensive official token costs. I wrote a script to automate the web interface, but it was a maintenance nightmare.

The ChatGPT website and app seemed to change weekly. Every time they tweaked a div class or a button ID, my script broke. I spent more time fixing my “money-saving” tool than actually using it. It was painful, annoying, and unreliable.

The Python Upgrade: Unlocking Gemini

When I switched to Gemini, I looked for a similar solution and found an open-source gem: Gemini-API by HanaokaYuzu.

This developer has built an incredible, stable Python wrapper for the Gemini Web interface. It pairs perfectly with my new subscription, allowing me to interact with Gemini Advanced programmatically through Python.

I am now paying significantly less money for a cutting-edge AI model that integrates seamlessly into my Python workflows. If you are looking to cut subscriptions without cutting capabilities, it’s time to look at Gemini.

The Setup Guide

How to Set Up Your Python Wrapper

If you want to use the HanaokaYuzu wrapper to mimic the web interface, you will need to grab your session cookies. This effectively “logs in” the script as you.

⚠️ Important Note: This method relies on your browser cookies. If you log out of Google or if the cookies expire, you will need to repeat these steps. For a permanent solution, use the official Gemini API and Google Cloud.

Step 1: Get Your Credentials

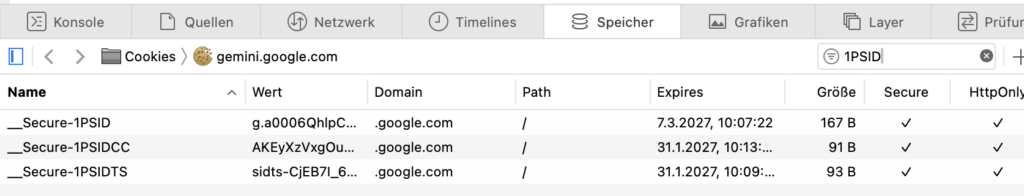

You don’t need a complex API key for this wrapper; you just need to prove you are a human. Here is how to find your __Secure-1PSID and __Secure-1PSIDTS tokens:

- Open your browser (Chrome, Firefox, or Edge) and navigate to gemini.google.com.

- Ensure you are logged into the Google account you want to use.

- Open the Developer Tools:
  - Windows/Linux: Press F12 or Ctrl + Shift + I.
  - Mac: Press Cmd + Option + I.

- Navigate to the Application tab (in Chrome/Edge) or the Storage tab (in Firefox).

- On the left sidebar, expand the Cookies dropdown and select https://gemini.google.com.

- Look for the following two rows in the list:
  - __Secure-1PSID
  - __Secure-1PSIDTS

- Copy the long string of characters from the Value column for both.

Step 2: Save the Cookies

Add a .env file to your project folder:

# Gemini web session cookies
SECURE_1PSID=g.a00
SECURE_1PSIDTS=sidts-CjEExamples
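Because these cookies expire, it helps to fail loudly when one is missing. Otherwise the wrapper fails later with a confusing login error. Here is a minimal sketch. `read_gemini_cookies` is a hypothetical helper and not part of the wrapper; it only assumes the two variable names used in the .env file above:

```python
import os


def read_gemini_cookies(env=os.environ):
    """Return (SECURE_1PSID, SECURE_1PSIDTS) or raise with a clear message.

    Hypothetical helper: the variable names match the .env file above.
    """
    names = ("SECURE_1PSID", "SECURE_1PSIDTS")
    # Treat unset and whitespace-only values as missing
    missing = [name for name in names if not env.get(name, "").strip()]
    if missing:
        raise RuntimeError(
            f"Missing cookie(s) in .env: {', '.join(missing)}. "
            "Copy them again from the browser's Developer Tools."
        )
    return tuple(env[name].strip() for name in names)
```

Call it after `load_dotenv()` and pass the two values to `GeminiClient`. When the cookies expire, you get a pointer to the fix instead of a stack trace.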

Automating Image Generation

We have our cookies, we have our wrapper, and now we are going to build with Nano Banana. This script sends a prompt to Gemini through the wrapper, requests a specific image, and saves it locally, all without opening a browser tab.

Here is the optimized, async-ready Python script:

import asyncio
import os
import sys
from pathlib import Path

# Third-party imports
from dotenv import load_dotenv
from gemini_webapi import GeminiClient, set_log_level

# Load the cookies from .env
load_dotenv()
Secure_1PSID = os.getenv("SECURE_1PSID")
Secure_1PSIDTS = os.getenv("SECURE_1PSIDTS")

# Enable logging for debugging
set_log_level("INFO")


def get_client():
    """Initialize the client with our cookies."""
    return GeminiClient(Secure_1PSID, Secure_1PSIDTS, proxy=None)


async def gen_and_edit():
    # Set up the output folder
    temp_dir = Path("temp")
    temp_dir.mkdir(exist_ok=True)

    # Import our local watermark remover (see next section).
    # Add this script's folder to sys.path so Python finds watermark_remover.py
    # even when the script is started from another directory.
    sys.path.append(str(Path(__file__).parent))
    try:
        from watermark_remover import remove_watermark
    except ImportError:
        print("Warning: Watermark remover module not found. Skipping cleanup.")
        remove_watermark = None

    client = get_client()
    await client.init()

    prompt = "Generate a photorealistic picture of a ragdoll cat dressed as a baker inside of a bakery shop"
    print(f"🎨 Sending prompt: {prompt}")
    response = await client.generate_content(prompt)

    if not response.images:
        # Gemini answered with text only (e.g. a refusal); show it
        print(response.text)

    for i, image in enumerate(response.images):
        filename = f"cat_{i}.png"
        img_path = temp_dir / filename

        # Save the raw image from Gemini into temp/
        await image.save(path=str(temp_dir), filename=filename, verbose=True)

        # If we have the remover module, clean the image immediately
        if remove_watermark:
            print(f"✨ Polishing image: {img_path}")
            cleaned = remove_watermark(img_path)
            cleaned.save(img_path)

        print(f"✅ Done! Saved to: {img_path}")


if __name__ == "__main__":
    asyncio.run(gen_and_edit())

If you have ever tried running a high-quality image generator (like Flux or SDXL) on your own laptop, you know the pain. You need massive amounts of VRAM, a beefy GPU, and patience. Using Gemini offloads that heavy lifting to Google’s supercomputers, saving your hardware.

But there is a “tax” for this free cloud compute: The Watermark.

Gemini stamps a semi-transparent logo on the bottom right of every image. While Google also uses SynthID (an invisible watermark for AI detection), the visible logo ruins the aesthetic for professional use.

The Fix: Mathematical Cleaning

You might think you need another AI to “paint over” the watermark, but that is overkill. Since the watermark is always the same logo applied with the same transparency, we can use Reverse Alpha Blending.
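For each color channel, the watermark is composited as watermarked = alpha * logo + (1 - alpha) * original. You can solve that equation for the original value. The pure-Python sketch below round-trips single pixel values. It assumes a white logo (255), which is the same value the cleaning module uses:

```python
LOGO = 255.0  # the logo is white


def blend(original, alpha):
    """Forward alpha blending: what the watermark does to one channel value."""
    return alpha * LOGO + (1 - alpha) * original


def unblend(watermarked, alpha):
    """Reverse alpha blending: solve the blend formula for the original value."""
    return (watermarked - alpha * LOGO) / (1 - alpha)


for original in (0, 80, 200):
    marked = blend(original, alpha=0.35)
    restored = unblend(marked, alpha=0.35)
    print(original, round(marked), round(restored))
```

In practice the watermarked pixel has already been rounded to an integer, so restoration is approximate. The rounding error gets multiplied by 1 / (1 - alpha). That is why it makes sense to cap alpha below 1 before dividing.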

I found an excellent implementation by journey-ad (ported to Python below) that subtracts the known watermark values from the pixels to reveal the original colors underneath.

⚠️ Important Requirement: To run the script below, you must download the alpha map files (bg_48.png and bg_96.png) from the original repository and place them in the same folder as your script.
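The cleaning module below derives alpha from bg_48.png and bg_96.png as max(R, G, B) / 255. That works if the assets are the logo captured over a pure black background, which is what the code implies but I have not verified. Blending white over black gives alpha * 255 + (1 - alpha) * 0 = alpha * 255. The sketch below shows that relationship; the pixel values are made up for illustration:

```python
def alpha_from_black_capture(rgb):
    """Recover alpha from one pixel of a white logo rendered over black.

    Over black, each channel equals alpha * 255. Taking the max, as the
    module does, uses the strongest channel.
    """
    return max(rgb) / 255.0


# Hypothetical pixels from a logo captured over black
samples = [(0, 0, 0), (51, 51, 50), (255, 255, 255)]
alphas = [alpha_from_black_capture(px) for px in samples]
print(alphas)
```

A fully opaque pixel (alpha = 1.0) would make the reverse blend divide by zero. This is why the module clamps alpha to 0.99.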

Here is the cleaning module:

#!/usr/bin/env python3
"""
Gemini Watermark Remover - Python Implementation
Ported from journey-ad/gemini-watermark-remover
"""
import sys
from io import BytesIO
from pathlib import Path

import numpy as np
from PIL import Image

# Ensure bg_48.png and bg_96.png are in this folder!
ASSETS_DIR = Path(__file__).parent


def load_alpha_map(size):
    """Load the alpha map for one logo size from the background assets."""
    bg_path = ASSETS_DIR / f"bg_{size}.png"
    if not bg_path.exists():
        raise FileNotFoundError(f"Missing asset: {bg_path} - Please download it from the repo.")
    bg_img = Image.open(bg_path).convert("RGB")
    bg_array = np.array(bg_img, dtype=np.float32)
    # Brightest channel per pixel, normalized to [0, 1]
    return np.max(bg_array, axis=2) / 255.0


# Cache the maps so we don't reload them every time
_ALPHA_MAPS = {}


def get_alpha_map(size):
    if size not in _ALPHA_MAPS:
        _ALPHA_MAPS[size] = load_alpha_map(size)
    return _ALPHA_MAPS[size]


def detect_watermark_config(width, height):
    """
    Gemini uses a 96px logo (64px margin) when both sides are > 1024px,
    and a 48px logo (32px margin) for everything else.
    """
    if width > 1024 and height > 1024:
        return {"logo_size": 96, "margin": 64}
    return {"logo_size": 48, "margin": 32}

def remove_watermark(image, verbose=False):
    """
    The magic: reverse the blending formula
        watermarked = alpha * logo + (1 - alpha) * original
    which gives
        original = (watermarked - alpha * logo) / (1 - alpha)
    `image` may be a path, raw bytes, or a PIL Image. Returns a new PIL Image.
    """
    # Load image and convert to RGB
    if isinstance(image, (str, Path)):
        img = Image.open(image).convert("RGB")
    elif isinstance(image, bytes):
        img = Image.open(BytesIO(image)).convert("RGB")
    else:
        img = image.convert("RGB")

    width, height = img.size
    config = detect_watermark_config(width, height)
    logo_size = config["logo_size"]
    margin = config["margin"]

    # Top-left corner of the logo (which sits in the bottom right)
    x = width - margin - logo_size
    y = height - margin - logo_size
    if x < 0 or y < 0:
        return img  # Image too small to carry the logo

    # Get the math ready
    alpha_map = get_alpha_map(logo_size)
    img_array = np.array(img, dtype=np.float32)

    LOGO_VALUE = 255.0  # The watermark is white
    MAX_ALPHA = 0.99    # Cap alpha so we never divide by zero

    # Process only the watermark area
    for row in range(logo_size):
        for col in range(logo_size):
            alpha = alpha_map[row, col]
            if alpha < 0.002:  # Skip noise: pixel is effectively untouched
                continue
            alpha = min(alpha, MAX_ALPHA)
            # Apply the reverse blend to the R, G and B channels
            for c in range(3):
                pixel_val = img_array[y + row, x + col, c]
                restored = (pixel_val - alpha * LOGO_VALUE) / (1.0 - alpha)
                img_array[y + row, x + col, c] = max(0, min(255, round(restored)))

    if verbose:
        print(f"Removed {logo_size}px watermark at ({x}, {y})")
    # An (h, w, 3) uint8 array is read as an RGB image
    return Image.fromarray(img_array.astype(np.uint8))


# Main block for CLI usage
if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("Usage: python watermark_remover.py <image_path>")
        sys.exit(1)

    img_path = Path(sys.argv[1])
    result = remove_watermark(img_path, verbose=True)
    output = img_path.parent / f"{img_path.stem}_clean{img_path.suffix}"
    result.save(output)
    print(f"Saved cleaned image to: {output}")

You could now build something with FastAPI on top of this and have your own image API! Yay.

The “LinkedIn Auto-Pilot” (With Memory)

⚠️ The Danger Zone (Read This First)

Before we look at the code, we need to address the elephant in the room. What we are doing here is technically against the Terms of Service.

When you use a wrapper to automate your personal Google account:

- Session Conflicts: You cannot easily use the Gemini web interface and this Python script simultaneously. They fight for the session state.

- Chat History: This script will flood your Gemini sidebar with hundreds of “New Chat” entries.

- Risk: There is always a non-zero risk of Google flagging the account. Do not use your primary Google account for this.

Now that we are all adults here… let’s build something cool.

The Architecture: Why “Human-in-the-Loop” Matters

I’ve tried fully automating social media before. It always ends badly. AI hallucinates, it gets the tone wrong, or it sounds like a robot.

That is why I built a Staging Environment. My script doesn’t post to LinkedIn. It posts to Flatnotes (my self-hosted note-taking app).

The Workflow:

- Python Script wakes up.

- Loads Memory: Checks memory.json to see which topics the last three posts covered (so we don’t repeat them).

- Generates Content: Uses a heavy-duty prompt to create a viral post.

- Staging: Pushes the draft to Flatnotes via API.

- Human Review: I wake up, read the note, tweak one sentence, and hit “Post.”
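The memory step boils down to a capped list stored as JSON. The script below does this with `arr[-3:]`. Here is an equivalent sketch using `collections.deque(maxlen=3)`, plus a slimmer variant that sends only the topic titles back to the model. The function names and the `thema` field are assumptions that follow the script's JSON schema:

```python
import json
from collections import deque
from pathlib import Path


def remember(path, entry, keep=3):
    """Append an entry to a JSON list on disk, keeping only the newest `keep`."""
    path = Path(path)
    entries = json.loads(path.read_text(encoding="utf-8")) if path.exists() else []
    recent = deque(entries, maxlen=keep)  # oldest entries fall off the left
    recent.append(entry)
    path.write_text(json.dumps(list(recent), ensure_ascii=False, indent=2), encoding="utf-8")
    return list(recent)


def avoid_topics_block(entries):
    """Format past topics for the prompt so the model can steer clear of them."""
    if not entries:
        return ""
    return "Avoid these recent topics:\n" + "\n".join(f"- {e['thema']}" for e in entries)
```

Sending only titles keeps the prompt short. Sending the full entries, as the script does, gives the model more context about tone and angle.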

The Code: The “Viral Generator”

This script uses asyncio to handle the network requests and maintains a local JSON database of past topics.

Key Features:

- JSON Enforcement: It tells Gemini to answer with JSON only and retries up to three times if the reply doesn’t parse, which makes the output easy to process.

- Topic Avoidance: It feeds the last three posts back into the prompt so Gemini picks fresh topics.

- Psychological Prompting: The prompt explicitly asks for marketing-psychology devices such as a “Thumb-Stopper” hook and a “Fear & Gap” agitation section.
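The script strips a leading `json` code fence and a trailing fence before parsing. If the model wraps its answer in extra chatter, that check fails and costs a retry. Here is a slightly more forgiving parser, as a sketch. `extract_json` is a hypothetical helper, not part of gemini_webapi:

```python
import json
import re

# Matches an optional json code fence wrapped around the whole reply
_FENCE = re.compile(r"^```(?:json)?\s*(.*?)\s*```$", re.DOTALL)


def extract_json(text):
    """Parse a model reply that should contain one JSON object.

    Accepts a bare object, a fenced json block, or an object surrounded by
    chatter. Raises ValueError (or its subclass JSONDecodeError) on failure.
    """
    text = text.strip()
    match = _FENCE.match(text)
    if match:
        text = match.group(1)
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        pass
    # Fall back to the outermost {...} span
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        return json.loads(text[start:end + 1])
    raise ValueError("no JSON object found in reply")
```

The outermost-braces fallback is a heuristic. It works for one object with chatter around it, but not for replies that contain two separate objects.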

import asyncio
import datetime
import json
import os
import time
from random import randint

import aiohttp
from dotenv import load_dotenv
from gemini_webapi import GeminiClient, set_log_level

load_dotenv()

# Set log level for debugging
set_log_level("INFO")

MEMORY_PATH = os.path.join(os.path.dirname(__file__), "memory.json")
HISTORY_PATH = os.path.join(os.path.dirname(__file__), "history.json")

FLATNOTES_API_URL = "https://flatnotes.notarealdomain.de/api/notes/LinkedIn"
FLATNOTES_USERNAME = os.getenv("FLATNOTES_USERNAME")
FLATNOTES_PASSWORD = os.getenv("FLATNOTES_PASSWORD")

Secure_1PSID = os.getenv("SECURE_1PSID")
Secure_1PSIDTS = os.getenv("SECURE_1PSIDTS")

async def post_to_flatnotes(new_post):
    """
    Fetch the current note, prepend the new post, and update the note via the
    Flatnotes API (username/password are exchanged for a bearer token).
    """
    if not FLATNOTES_USERNAME or not FLATNOTES_PASSWORD:
        print(
            "[ERROR] FLATNOTES_USERNAME or FLATNOTES_PASSWORD is not set in .env. Skipping Flatnotes update."
        )
        return

    # The token endpoint lives on the same host as the notes API
    token_url = FLATNOTES_API_URL.split("/api/")[0] + "/api/token"

    async with aiohttp.ClientSession() as session:
        # 1. Get bearer token
        token_payload = {"username": FLATNOTES_USERNAME, "password": FLATNOTES_PASSWORD}
        async with session.post(token_url, json=token_payload) as token_resp:
            if token_resp.status != 200:
                print(f"[ERROR] Failed to get token: {token_resp.status}")
                return
            token_data = await token_resp.json()

        access_token = token_data.get("access_token")
        if not access_token:
            print("[ERROR] No access_token in token response.")
            return
        headers = {"Authorization": f"Bearer {access_token}"}

        # 2. Get current note content
        async with session.get(FLATNOTES_API_URL, headers=headers) as resp:
            if resp.status == 200:
                try:
                    data = await resp.json()
                    current_content = data.get("content", "")
                except aiohttp.ContentTypeError:
                    # Fallback: treat as plain text
                    current_content = await resp.text()
            else:
                current_content = ""

        # 3. Prepend the new post and write the note back
        updated_content = f"{new_post}\n\n---\n\n" + current_content
        patch_payload = {"newContent": updated_content}
        async with session.patch(
            FLATNOTES_API_URL, json=patch_payload, headers=headers
        ) as resp:
            if resp.status not in (200, 204):
                print(f"[ERROR] Failed to update Flatnotes: {resp.status}")
            else:
                print("[INFO] Flatnotes updated successfully.")

def save_history(new_json):
    """Append a post to the full history file (never truncated)."""
    arr = []
    if os.path.exists(HISTORY_PATH):
        try:
            with open(HISTORY_PATH, "r", encoding="utf-8") as f:
                arr = json.load(f)
            if not isinstance(arr, list):
                arr = []
        except Exception:
            arr = []
    arr.append(new_json)
    with open(HISTORY_PATH, "w", encoding="utf-8") as f:
        json.dump(arr, f, ensure_ascii=False, indent=2)
    return arr


def load_memory():
    if not os.path.exists(MEMORY_PATH):
        return []
    try:
        with open(MEMORY_PATH, "r", encoding="utf-8") as f:
            data = json.load(f)
        if isinstance(data, list):
            return data
        return []
    except Exception:
        return []


def save_memory(new_json):
    arr = load_memory()
    arr.append(new_json)
    arr = arr[-3:]  # Keep only the last 3
    with open(MEMORY_PATH, "w", encoding="utf-8") as f:
        json.dump(arr, f, ensure_ascii=False, indent=2)
    return arr


def get_client():
    return GeminiClient(Secure_1PSID, Secure_1PSIDTS, proxy=None)


def get_current_date():
    return datetime.datetime.now().strftime("%d. %B %Y")

async def example_generate_content():
    client = get_client()
    await client.init()
    chat = client.start_chat(model="gemini-3.0-pro")

    # Feed the last three posts back in so Gemini avoids repeating topics
    memory_entries = load_memory()
    memory_str = ""
    if memory_entries:
        memory_str = "\n\n---\nPast LinkedIn posts (last 3):\n" + "\n".join(
            json.dumps(entry, ensure_ascii=False, indent=2) for entry in memory_entries
        )

    # The JSON example uses escaped newlines (\\n) so Gemini sees valid JSON
    prompt = (
        """
**Role:** You are a world-class LinkedIn strategist (top 1% creator) and behavioral psychologist.
**Mission:** Write a viral LinkedIn post, short and to the point (people only read a little), that establishes me as the undisputed authority on Cybersecurity & AI Governance in the DACH region.
**Goal:** Maximum reach (100k-follower strategy) + direct lead generation for "https://karlcom.de" (high-ticket consulting).
**Output format:** Valid JSON only.
**Date:** Today is """
        + str(get_current_date())
        + """. Only use brand-new topics.

**PHASE 1: Deep Intelligence (Google Search)**
Use Google Search. Search for "Trending News Cybersecurity AI Cloud EU Sovereignty last 24h".
Find the "elephant in the room": the topic that keeps C-level managers (CISO, CTO, CEO) awake at night but that nobody is talking about bluntly yet.
* *Focus:* Major vulnerabilities, hacker attacks, data leaks, AI, cybersecurity, NIS2 failures, shadow-AI data leaks, cloud-exit scenarios.
* *Requirement:* It must be a topic with financial or criminal-liability risk.

**PHASE 2: The "Viral Architecture" (Construction)**
Write the post in GERMAN. Strictly follow this 5-step matrix for virality:

**1. The "Thumb-Stopper" (the hook, lines 1-2):**
* No questions ("Wussten Sie...?").
* No news ("Heute wurde Gesetz X verabschiedet").
* **INSTEAD:** A hard contrarian position or an uncomfortable truth.
* *Style:* "Ihr aktueller Sicherheitsplan ist nicht nur falsch. Er ist fahrlässig."
* *Goal:* The reader feels a physical urge to keep reading.

**2. The "Fear & Gap" (the agitation):**
* Explain the consequences of the news from Phase 1.
* Use loss aversion: show what they stand to lose (money, reputation, job) if they ignore it.
* Use short, rhythmic sentences (staccato style). This greatly increases reading speed.

**3. The "Authority Bridge" (the turn):**
* Switch from panic to competence.
* Show that blind actionism is the wrong move now. What is needed is strategy.
* This is where you establish your status: you are the rock in the storm.

**4. The "Soft Pitch" (the solution):**
* Present **Karlcom.de** as the exclusive solution. Don't beg ("Wir bieten an..."); state it as a fact:
* *Wording:* "Das ist der Standard, den wir bei Karlcom.de implementieren." or "Deshalb rufen uns Vorstände an, wenn es brennt."

**5. The "Engagement Trap" (the close):**
* Ask a question that cannot be answered with "yes/no" but provokes an opinion. (Drives the algorithm.)
* End with an imperative CTA, for example: "Sichern wir Ihre Assets."

**PHASE 3: Anti-AI & Status Checks**
* **Forbidden words (immediate disqualification):** "entfesseln", "tauchen wir ein", "nahtlos", "Gamechanger", "In der heutigen Welt", "Synergie", "Leuchtturm".
* **Forbidden formatting:** No **bold** sentences (looks like advertising). No hashtag blocks with more than 3 tags.
* **Emojis:** At most 2. Only "status emojis" (📉, 🛑, 🔒, ⚠️). NO rockets 🚀.

**PHASE 4: JSON Output**
Create the JSON. The `post` string must use `\\n` for line breaks.

**Output schema:**
```json
{
  "analyse": "Short explanation of why this topic will go viral today (psychological background).",
  "thema": "Title of the topic",
  "source": "Source",
  "post": "Line 1 (Thumb-Stopper)\\n\\nLine 2 (Gap)\\n\\nParagraph (Agitation)...\\n\\n(Authority Bridge)...\\n\\n(Pitch Karlcom.de)...\\n\\n(Engagement Trap)"
}
```

**Context: past posts. Avoid these topics for now:**\n\n"""
        + memory_str
    )

    response = await chat.send_message(prompt.strip())
    previous_session = chat.metadata
    max_attempts = 3
    newest_post_str = None

    def format_flatnotes_post(json_obj):
        """Turn the model's JSON into a Markdown note for Flatnotes."""
        heading = f"# {json_obj.get('thema', '').strip()}\n"
        analyse = json_obj.get("analyse", "").strip()
        analyse_block = f"\n```psychology\n{analyse}\n```\n" if analyse else ""
        post = json_obj.get("post", "").strip()
        source = json_obj.get("source", "").strip()
        source_block = f"\nSource: {source}" if source else ""
        return f"{heading}{analyse_block}\n{post}{source_block}"

    for attempt in range(max_attempts):
        try:
            # Strip an optional json code fence around the answer
            text = response.text.strip()
            if text.startswith("```json"):
                text = text[7:].lstrip()
            if text.endswith("```"):
                text = text[:-3].rstrip()
            json_obj = json.loads(text)
            save_memory(json_obj)
            save_history(json_obj)
            newest_post_str = format_flatnotes_post(json_obj)
            break
        except (json.JSONDecodeError, AttributeError):
            print(response.text)
            print("- output was not valid JSON, retrying...")
            if attempt < max_attempts - 1:
                # Continue the same conversation and insist on JSON
                previous_chat = client.start_chat(metadata=previous_session)
                response = await previous_chat.send_message(
                    f"ENSURE PROPER JSON OUTPUT!\n\n{prompt}"
                )
            else:
                print(f"[ERROR] Failed to get valid JSON response after {max_attempts} attempts.")

    # Post to Flatnotes if we have a valid post
    if newest_post_str:
        await post_to_flatnotes(newest_post_str)


async def main():
    await example_generate_content()


if __name__ == "__main__":
    for _ in range(50):
        asyncio.run(main())
        time.sleep(randint(60, 300))  # Wait 1 to 5 minutes before the next run
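The retry loop above mixes parsing, file writes, and network calls. Pulling the "ask until it parses" part into its own coroutine makes it testable without a Google account. Here is a sketch. `send` stands in for whatever call reaches Gemini (in the script, a chat session's `send_message` followed by `.text`). The fake model exists only for the demo, and `ask_for_json` is a hypothetical name:

```python
import asyncio
import json


async def ask_for_json(send, prompt, max_attempts=3):
    """Send `prompt`, re-asking until the reply parses as JSON.

    `send` is any coroutine function that takes a string and returns the
    reply text. Returns the parsed object, or None after `max_attempts`.
    """
    message = prompt
    for attempt in range(1, max_attempts + 1):
        reply = await send(message)
        try:
            return json.loads(reply)
        except json.JSONDecodeError:
            print(f"Attempt {attempt}: reply was not valid JSON")
            message = f"ENSURE PROPER JSON OUTPUT!\n\n{prompt}"
    return None


async def demo():
    # Fake model: answers with prose first, then with JSON
    replies = iter(["Here is your post!", '{"thema": "NIS2"}'])

    async def fake_send(_message):
        return next(replies)

    return await ask_for_json(fake_send, "Write the post as JSON.")


result = asyncio.run(demo())
print(result)
```

The real script can then call `ask_for_json` and handle memory, history, and Flatnotes only once it has a valid object.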

The Result (Case Study)

Real-World Example: The “Ethics & Liability” Angle

To prove this isn’t just generating generic corporate fluff, let’s look at a raw output from a simulation run.

I set the internal date to January 31, 2026 (a future scenario regarding EU regulations) and asked the AI to find the “Elephant in the room” regarding Cybersecurity.
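In the script, `get_current_date()` always returns today's date. One way to replay a scenario like this is an optional override. Here is a sketch: `prompt_date` is a hypothetical variant of the script's helper that uses the same format string. `%B` follows the process locale, so month names are English under Python's default C locale:

```python
import datetime


def prompt_date(today=None):
    """Date string for the prompt; pass a date to simulate another day."""
    today = today or datetime.date.today()
    return today.strftime("%d. %B %Y")


print(prompt_date(datetime.date(2026, 1, 31)))  # 31. January 2026 (default C locale)
```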

The AI didn’t choose a technical topic like “Firewalls.” It chose Personal Liability. It identified that C-Level executives are terrified of going to jail or losing personal assets due to negligence.

Here is the raw JSON output from the script. Pay attention to the analyse field: this is the AI “thinking” before it writes.

{
  "analyse": "Psychologischer Hebel: 'Personal Accountability' & 'Optimism Bias'. C-Level-Führungskräfte leben oft in der Illusion, Cyber-Risiken durch die Einstellung eines CISO vollständig an diesen delegiert zu haben (Moral Hazard). Der 'Elephant in the room' am 31.01.2026 ist das Ende der Schonfrist für die NIS2-Umsetzung...",
  "thema": "Der CISO-Irrtum: Warum Delegation Sie jetzt ins Gefängnis bringt",
  "source": "EU NIS2 Enforcement Reports Q1 2026 / Handelsblatt",
  "post": "Ihr CISO ist nicht Ihre Versicherung.\nEr ist Ihr potenzieller Belastungszeuge.\n\nDer Irrglaube vieler Vorstände hat im Januar 2026 ein jähes Ende gefunden.\n\nDie erste Welle der NIS2-Prüfungen rollt durch die DACH-Region.\nUnd die Aufsichtsbehörden suchen nicht nach technischen Fehlern.\nSie suchen nach 'Organisationsverschulden'.\n\nDas Szenario:\nEin Ransomware-Angriff legt die Produktion lahm.\nDer CEO sagt: 'Ich habe doch einen Top-CISO eingestellt. Das war sein Job.'\n\nDer Richter sagt: 'Nein. Die Überwachungspflicht ist nicht delegierbar.'\n\nWenn Sie Cyber-Resilienz als reine IT-Aufgabe behandeln, handeln Sie grob fahrlässig.\nDer CISO liefert die Technik.\nAber *Sie* liefern die Governance.\n\nWenn Sie das Risikoprofil Ihrer eigenen Supply Chain nicht ohne PowerPoint erklären können, haften Sie.\nNicht die GmbH.\nSie persönlich.\nMit Ihrem Privatvermögen.\n\n[...]\n\nSichern wir Ihre Assets. ⚖️"
}

Why This Post Works (The Anatomy)

This output demonstrates exactly why we use the “Human-in-the-Loop” architecture with Flatnotes. The AI followed the 5-step viral matrix perfectly:

- The Hook: “Ihr CISO ist nicht Ihre Versicherung. Er ist Ihr potenzieller Belastungszeuge.” (“Your CISO is not your insurance. He is your potential witness for the prosecution.”) It attacks a common belief immediately. It’s controversial and scary.

- The Agitation: It creates a specific scenario (Courtroom, Judge vs. CEO). It uses the psychological trigger of Loss Aversion (“Mit Ihrem Privatvermögen” / “With your private assets”).

- The Authority Bridge: It stops the panic by introducing a clear concept: “Executive-Shield Standard.”

- The Tone: It avoids typical AI words like “Synergy” or “Landscape.” It is short, punchy, and uses a staccato rhythm.
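A human reviews every draft anyway, but an automated pre-check of the prompt’s hard rules can save a read-through. Here is a sketch. The banned words and limits are copied from the prompt. The emoji count is a heuristic based on Unicode’s “Symbol, other” category, so it also counts symbols such as ©. `lint_post` is a hypothetical helper:

```python
import re
import unicodedata

# Banned words and limits taken from the prompt's "Anti-AI & Status Checks" phase
BANNED = ["entfesseln", "tauchen wir ein", "nahtlos", "Gamechanger",
          "In der heutigen Welt", "Synergie", "Leuchtturm"]
MAX_EMOJIS = 2
MAX_HASHTAGS = 3


def lint_post(post):
    """Return a list of rule violations for a generated post (empty = clean)."""
    problems = []
    lowered = post.lower()
    for word in BANNED:
        if word.lower() in lowered:
            problems.append(f"banned phrase: {word!r}")
    # Heuristic: "So" (Symbol, other) covers most emoji
    emojis = [ch for ch in post if unicodedata.category(ch) == "So"]
    if len(emojis) > MAX_EMOJIS:
        problems.append(f"{len(emojis)} emojis (max {MAX_EMOJIS})")
    if "🚀" in post:
        problems.append("rocket emoji")
    hashtags = re.findall(r"(?<!\w)#\w+", post)
    if len(hashtags) > MAX_HASHTAGS:
        problems.append(f"{len(hashtags)} hashtags (max {MAX_HASHTAGS})")
    if "**" in post:
        problems.append("bold text")
    return problems
```

Running it inside `format_flatnotes_post` and writing any findings into the note would put the warnings right where the review happens.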

Summary

By combining Gemini’s large context window and built-in Google Search (to read the news) with Python automation (to handle the logic) and Flatnotes (for human review), we have built a content engine that doesn’t just “write posts”: it thinks strategically.

It costs me pennies in electricity, saves me hours of brainstorming, and produces content that is arguably better than 90% of the generic posts on LinkedIn today.

The Verdict

From Consumer to Commander

We started this journey with a simple goal: Save €15 a month by cancelling ChatGPT Plus. But we ended up with something much more valuable.

By switching to Gemini Advanced and wrapping it in Python, we moved from being passive consumers of AI to active commanders.

- We built a Nano Banana Image Generator that bypasses the browser and cleans up its own mess (watermarks).

- We engineered a LinkedIn Strategist that remembers our past posts, researches the news, and writes with psychological depth, all while we sleep.

Is This Setup for You?

This workflow is not for everyone. It is “hacky.” It relies on browser cookies that expire. It dances on the edge of Terms of Service.

- Stick to ChatGPT Plus if: …honestly, I can’t think of a reason. It is subpar in every way.

- Switch to Gemini & Python if: You are a builder. You want to save money and you want to build custom workflows that no off-the-shelf product can offer (for free 😉).

The Final Word on “Human-in-the-Loop”

The most important lesson from our LinkedIn experiment wasn’t the code; it was the workflow. The AI generates the draft, but the human (you) makes the decision.

Whether you are removing watermarks from a cat picture or approving a post about Cyber-Liability, the magic happens when you use AI to do the heavy lifting, leaving you free to do the creative directing.

Ready to build your own agent?

Happy coding! 🚀