KarlGPT represents my pursuit of true freedom, through AI. I’ve realized that my ultimate life goal is to do absolutely nothing. Unfortunately, my strong work ethic prevents me from simply slacking off or quietly quitting.

This led me to the conclusion that I need to maintain, or even surpass, my current level of productivity while still achieving my dream of doing nothing. Given the advancements in artificial intelligence, this seemed like a solvable problem.

I began by developing APIs to gather all the necessary data from my work accounts and tools. Then, I started working on a local AI model and server to ensure a secure environment for my data.

Now, I just need to fine-tune the entire system, and soon, I’ll be able to automate my work life entirely, allowing me to finally live my dream: doing absolutely nothing.

This is going to be a heavily censored post, since it involves details about my work that I cannot legally disclose.

Technologies

Django and Django REST Framework (DRF)

Django served as the backbone for the server-side logic, offering a robust, scalable, and secure foundation for building web applications. The Django REST Framework (DRF) made it simple to expose APIs with fine-grained control over permissions, serialization, and views. DRF’s ability to handle both function-based and class-based views allowed for a clean, modular design, ensuring the APIs could scale as the project evolved.

- Django Documentation: https://docs.djangoproject.com

- Django REST Framework Docs: https://www.django-rest-framework.org

Celery Task Queue

To handle asynchronous tasks such as sending emails, performing background computations, and integrating external services (AI APIs), I implemented Celery. Celery provided a reliable and efficient way to manage long-running tasks without blocking the main application. This was critical for tasks like scheduling periodic jobs and processing user-intensive data without interrupting the API’s responsiveness.

- Celery Official Documentation: https://docs.celeryproject.org

React with TypeScript and TailwindCSS

For the frontend, I utilized React with TypeScript for type safety and scalability. TypeScript ensured the codebase remained maintainable as the project grew. Meanwhile, TailwindCSS enabled rapid UI development with its utility-first approach, significantly reducing the need for writing custom CSS. Tailwind’s integration with React made it seamless to create responsive and accessible components.

This is my usual frontend stack, often paired with Astrojs. I use plain React, with no extra framework on top.

- React Official Docs: https://reactjs.org

- TypeScript Docs: https://www.typescriptlang.org

- TailwindCSS Documentation: https://tailwindcss.com

Vanilla Python

Due to restrictions that prohibited the use of external libraries in local API wrappers, I had to rely on pure Python to implement APIs and related tools. This presented unique challenges, such as managing HTTP requests, data serialization, and error handling manually. Below is an example of a minimal API written without external dependencies:

    import re
    import json
    from http.server import BaseHTTPRequestHandler, HTTPServer

    # In-memory data returned by the demo endpoint
    items = {"test": "mewo"}


    class ControlKarlGPT(BaseHTTPRequestHandler):
        def do_GET(self):
            # Any path containing /api/helloworld returns the items as JSON
            if re.search("/api/helloworld", self.path):
                self.send_response(200)
                self.send_header("Content-type", "application/json")
                self.end_headers()
                response = json.dumps(items).encode()
                self.wfile.write(response)
            else:
                # Everything else is a 404 with an empty body
                self.send_response(404)
                self.end_headers()


    def run(server_class=HTTPServer, handler_class=ControlKarlGPT, port=8000):
        # An empty host string binds to all local interfaces
        server_address = ("", port)
        httpd = server_class(server_address, handler_class)
        print(f"Starting server at http://127.0.0.1:{port}")
        httpd.serve_forever()


    if __name__ == "__main__":
        run()

- Python Official Documentation: https://docs.python.org

By weaving these technologies together, I was able to build a robust, scalable system that adhered to the project’s constraints while still delivering a polished user experience. Each tool played a crucial role in overcoming specific challenges, from frontend performance to backend scalability and compliance with restrictions.

File-based Cache

To minimize system load, I developed a lightweight caching framework based on a simple JSON file-based cache. Essentially, this required creating a “mini-framework” akin to Flask but with built-in caching capabilities tailored to the project’s needs. While a pull-based architecture—where workers continuously poll the server for new tasks—was an option, it wasn’t suitable here. The local APIs were designed as standalone programs, independent of a central server.

This approach was crucial because some of the tools we integrate lack native APIs or straightforward automation options. By building these custom APIs, I not only solved the immediate challenges of this project (e.g., powering KarlGPT) but also created reusable components for other tasks. These standalone APIs provide a solid foundation for automation and flexibility beyond the scope of this specific system.
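To make the caching idea concrete, here is a minimal sketch of a JSON file-based cache in pure Python. It is not the actual KarlGPT code: the class name `JsonFileCache`, its methods, and the time-to-live (TTL) expiry rule are all assumptions chosen for illustration.

```python
import json
import time
from pathlib import Path


class JsonFileCache:
    """Tiny JSON file cache with a per-entry time-to-live (hypothetical design)."""

    def __init__(self, path, ttl_seconds=300):
        self.path = Path(path)
        self.ttl_seconds = ttl_seconds

    def _load(self):
        # A missing or corrupt cache file is treated as an empty cache.
        try:
            return json.loads(self.path.read_text(encoding="utf-8"))
        except (FileNotFoundError, json.JSONDecodeError):
            return {}

    def get(self, key):
        entry = self._load().get(key)
        if entry is None or time.time() - entry["stored_at"] > self.ttl_seconds:
            return None
        return entry["value"]

    def set(self, key, value):
        data = self._load()
        data[key] = {"stored_at": time.time(), "value": value}
        # Write to a temporary file first, then swap it in, so a crash
        # mid-write does not leave a half-written cache behind.
        tmp = self.path.with_suffix(".tmp")
        tmp.write_text(json.dumps(data), encoding="utf-8")
        tmp.replace(self.path)

    def get_or_compute(self, key, compute):
        # Only call the expensive function when there is no fresh entry.
        cached = self.get(key)
        if cached is not None:
            return cached
        value = compute()
        self.set(key, value)
        return value
```

A handler in one of the standalone APIs could then wrap a slow tool call as `cache.get_or_compute("tickets", fetch_tickets)`, where `fetch_tickets` is a placeholder for whatever actually talks to the tool. One limitation of this sketch: a cached value of `None` is indistinguishable from a miss.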

How it works

The first step was to identify which tasks I perform daily and the tools I use for each of them. To automate anything effectively, I needed to abstract these tasks into programmable actions. For example:

- Read Emails

- Respond to Invitations

- Check Tickets

Next, I broke these actions down further to understand the decision-making process behind each. For instance, when do I respond to certain emails, and how do I determine which ones fall under my responsibilities? This analysis led to a detailed matrix that mapped out every task, decision point, and tool I use.
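One way such a matrix could be represented in code is sketched below. The real matrix is not shown in this post, so every row, tool name, and decision question here is a made-up placeholder, and the `Task` and `DecisionPoint` classes are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionPoint:
    question: str        # the yes/no question that drives the action
    if_yes: str          # action to take when the answer is yes
    if_no: str = "skip"  # action to take otherwise


@dataclass
class Task:
    name: str
    tool: str
    decisions: list[DecisionPoint] = field(default_factory=list)


# Hypothetical rows for illustration only.
MATRIX = [
    Task("Read Emails", "mail client", [
        DecisionPoint("Is the email addressed to me or my team?", "draft a reply", "archive"),
    ]),
    Task("Respond to Invitations", "calendar", [
        DecisionPoint("Is the slot free?", "accept", "decline"),
    ]),
    Task("Check Tickets", "ticket system", [
        DecisionPoint("Is the ticket assigned to me?", "triage"),
    ]),
]


def tasks_for_tool(matrix, tool):
    """Return the names of all tasks that rely on the given tool."""
    return [task.name for task in matrix if task.tool == tool]
```

Keeping the matrix as plain data like this means the same structure can feed both the automation and a human-readable overview.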

The result? A comprehensive, structured overview of my workflow. Not only did this help me build the automation framework, but it also provided a handy reference for explaining my role. If my boss ever asks, “What exactly do you do here?” I can present the matrix and confidently say, “This is everything.”

As you can see, automating work can be a lot of work upfront—an investment in reducing effort in the future. Ironically, not working requires quite a bit of work to set up! 😂

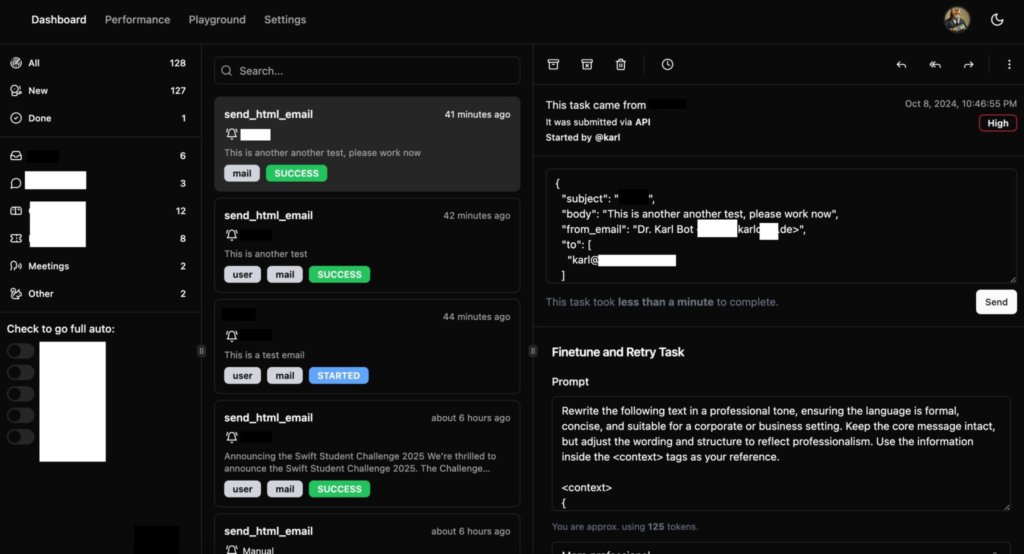

The payoff is a system where tasks are handled automatically, and I have a dashboard to monitor, test, and intervene as needed. It provides a clear overview of all ongoing processes and ensures everything runs smoothly.

AI Magic: Behind the Scenes

The AI processing happens locally using Llama 3, which plays a critical role in removing all personally identifiable information (PII) from emails and text. This is achieved using a carefully crafted system prompt fine-tuned for my specific job and company needs. Ensuring sensitive information stays private is paramount, and by keeping AI processing local, we maintain control over data security.
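As a rough sketch of what a local redaction call could look like, the snippet below talks to a model served by Ollama over its HTTP chat endpoint, using only the standard library. Several things here are assumptions: that the model is served by Ollama on its default port, the model tag `llama3`, and above all the system prompt, which is a generic placeholder and not the prompt actually used.

```python
import json
import urllib.request

# Assumptions: Ollama's default local endpoint and a placeholder prompt.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
REDACTION_PROMPT = (
    "Rewrite the user's text with all names, email addresses, phone numbers "
    "and customer identifiers replaced by placeholders such as [NAME]. "
    "Return only the rewritten text."
)


def build_redaction_request(text, model="llama3"):
    """Build the JSON payload for a non-streaming chat request."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "system", "content": REDACTION_PROMPT},
            {"role": "user", "content": text},
        ],
    }


def redact(text, url=OLLAMA_CHAT_URL, timeout=60):
    """Send the text to the local model and return its redacted version."""
    body = json.dumps(build_redaction_request(text)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

Because a language model can miss identifiers, a prompt-based redactor like this is best treated as one layer of protection rather than a guarantee.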

In most cases, the local AI is fully capable of handling the workload. However, for edge cases where additional computational power or advanced language understanding is required, Claude or ChatGPT serve as backup systems. When using cloud-based AI, it is absolutely mandatory to ensure that no sensitive company information is disclosed. For this reason, the system does not operate in full-auto mode. Every prompt is reviewed and can be edited before being sent to the cloud, adding an essential layer of human oversight.
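The review step can be sketched as a small gate in front of the cloud call. This is an illustration under assumptions, not the real implementation: `send_with_review`, `console_review`, and the injected `send_to_cloud` callable are hypothetical names, and the actual system presumably does this through the dashboard rather than a terminal.

```python
def send_with_review(prompt, review, send_to_cloud):
    """Send a prompt to a cloud model only after a human approves it.

    review: callable that shows the prompt to a person and returns the
            (possibly edited) text, or None to reject it.
    send_to_cloud: callable that performs the actual API call.
    """
    approved = review(prompt)
    if approved is None:
        return None  # rejected: nothing leaves the machine
    return send_to_cloud(approved)


def console_review(prompt):
    """Minimal reviewer: print the prompt and ask on the terminal."""
    print("--- prompt pending review ---")
    print(prompt)
    answer = input("Send as-is [y], edit [e], or reject [n]? ").strip().lower()
    if answer == "y":
        return prompt
    if answer == "e":
        return input("Edited prompt: ")
    return None
```

Passing the reviewer and the sender in as callables keeps the gate independent of any particular cloud provider and makes it easy to test with stand-ins.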

To manage memory and task tracking, I use mem0 in conjunction with a PostgreSQL database, which acts as the system’s primary “brain” 🧠. This database, exposed through Django REST Framework, handles everything from polling for new tasks to storing results. This architecture keeps task processing efficient while maintaining data integrity and security.

Conclusion

Unfortunately, I had to skip over many of the intricate details and creative solutions that went into making this system work. One of the biggest challenges was building APIs around legacy tools that lack native automation capabilities. Bringing these tools into the AI age required innovative thinking and a lot of trial and error.

The preparation phase was equally demanding. Breaking down my daily work into a finely detailed matrix took time and effort. If you have a demanding role, such as being a CEO, it’s crucial to take a step back and ask yourself: What exactly do I do? A vague answer like “represent the company” won’t cut it. To truly understand and automate your role, you need to break it down into detailed, actionable components.

Crafting advanced prompts tailored to specific tasks and scenarios was another key part of the process. To structure these workflows, I relied heavily on frameworks like CO-STAR and AUTOMAT (stay tuned for an upcoming blog post about these).

I even created AI personas for the people I interact with regularly and designed test loops to ensure the responses generated by the AI were accurate and contextually appropriate. While I drew inspiration from CrewAI, I ultimately chose LangChain for most of the complex workflows because its extensive documentation made development easier. For simpler tasks, I used lightweight local AI calls via Ollama.

This project has been an incredible journey of challenges, learning, and innovation. It is currently in an early alpha stage, requiring significant manual intervention. Full automation will only proceed once I receive explicit legal approval from my employer to ensure compliance with all applicable laws, company policies, and data protection regulations.

Legal Disclaimer: The implementation of any automation or AI-based system in a workplace must comply with applicable laws, organizational policies, and industry standards. Before deploying such systems, consult with legal counsel, relevant regulatory bodies, and your employer to confirm that all requirements are met. Unauthorized use of automation or AI may result in legal consequences or breach of employment contracts. Always prioritize transparency, data security, and ethical considerations when working with sensitive information.